Deep learning, a transformative branch of machine learning, has enabled computers to outperform humans in specific visual tasks such as analyzing medical imagery. However, as artificial intelligence expands into video interpretation and real-world event analysis, these models are becoming ever larger and more computationally demanding.

According to recent research, training video recognition models can require up to 50 times more data and eight times greater processing power than image classification models. This presents a significant challenge as the demand for computational resources to train deep learning models continues to grow exponentially, while concerns about AI's substantial carbon footprint intensify. Additionally, implementing large-scale video recognition models on low-power mobile devices—where many AI applications are heading—remains particularly difficult.

Song Han, an assistant professor in MIT's Department of Electrical Engineering and Computer Science (EECS), is addressing this challenge by developing more efficient deep learning models. In a paper presented at the International Conference on Computer Vision, Han, MIT graduate student Ji Lin, and MIT-IBM Watson AI Lab researcher Chuang Gan detail a method for shrinking video recognition models to accelerate training and improve performance on smartphones and other mobile devices. Their approach shrinks a state-of-the-art model to one-sixth of its original size, cutting its parameter count from 150 million to 25 million.

"Our objective is to democratize AI access for users with low-power devices," explains Han. "To achieve this, we need to design highly efficient AI models that consume less energy and operate seamlessly on edge devices, where AI applications are increasingly being deployed."

The declining costs of cameras and video editing software, combined with the emergence of new video streaming platforms, have resulted in an unprecedented flood of online content. YouTube alone receives 30,000 hours of new video uploads every hour. More efficient tools to catalog this content would help viewers and advertisers locate relevant videos faster, according to the researchers. Such tools would also enable institutions like hospitals and nursing homes to run AI applications locally rather than in the cloud, keeping sensitive data private and secure.

Image and video recognition models are built on neural networks, loosely inspired by how the brain processes information. Whether analyzing digital photos or video sequences, neural networks identify patterns in pixels and construct increasingly abstract representations of what they observe. With sufficient examples, neural networks "learn" to recognize people, objects, and their relationships.

Leading video recognition models currently employ three-dimensional convolutions to encode temporal information in image sequences, resulting in larger, more computationally intensive models. To minimize these calculations, Han and his team developed an innovative operation they call a temporal shift module, which shifts the feature maps of selected video frames to adjacent frames. By blending spatial representations of past, present, and future frames, the model gains temporal understanding without explicitly representing time.
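The shifting idea described above can be illustrated with a minimal NumPy sketch. This is an illustration under stated assumptions, not the researchers' implementation: the function name `temporal_shift`, the `fold_div` parameter (the fraction of channels moved in each direction), the `(T, C, H, W)` layout, and the zero-filling at the first and last frames are all choices made here for clarity.

```python
import numpy as np


def temporal_shift(x, fold_div=8):
    """Shift a fraction of feature channels one step along the time axis.

    x: array of shape (T, C, H, W), one feature map stack per video frame.
    fold_div: hypothetical knob; 1/fold_div of the channels move in each
              direction and the rest stay where they are.
    """
    num_channels = x.shape[1]
    fold = num_channels // fold_div
    out = np.zeros_like(x)  # assumption: vacated positions are zero-filled

    # Group 1: frame t receives these channels from frame t+1 (future).
    out[:-1, :fold] = x[1:, :fold]
    # Group 2: frame t receives these channels from frame t-1 (past).
    out[1:, fold:2 * fold] = x[:-1, fold:2 * fold]
    # Remaining channels are left untouched (present).
    out[:, 2 * fold:] = x[:, 2 * fold:]
    return out


# Toy clip: 4 frames, 8 channels, 1x1 spatial size.
# Every value in frame t equals t, so the shift is easy to read off.
frames = np.arange(4, dtype=np.float32).reshape(4, 1, 1, 1) * np.ones(
    (4, 8, 1, 1), dtype=np.float32
)
shifted = temporal_shift(frames, fold_div=4)

print(shifted[:, 0, 0, 0])  # a channel pulled from the next frame
print(shifted[:, 2, 0, 0])  # a channel pulled from the previous frame
print(shifted[:, 4, 0, 0])  # an unshifted channel
```

After the shift, each frame's stack of channels holds a mix of past, present, and future features, so an ordinary two-dimensional convolution applied frame by frame sees temporal context. The shift itself only moves data and adds no parameters or multiplications.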

The outcome: a model that outperformed competitors in recognizing actions in the Something-Something video dataset, securing first place in both version 1 and version 2 in recent public rankings. An online implementation of the shift module is also fast enough to analyze movements in real time. In a recent demonstration, Lin, an EECS PhD student, showed how a single-board computer connected to a video camera could instantly classify hand gestures while drawing only as much energy as it takes to power a bicycle light.

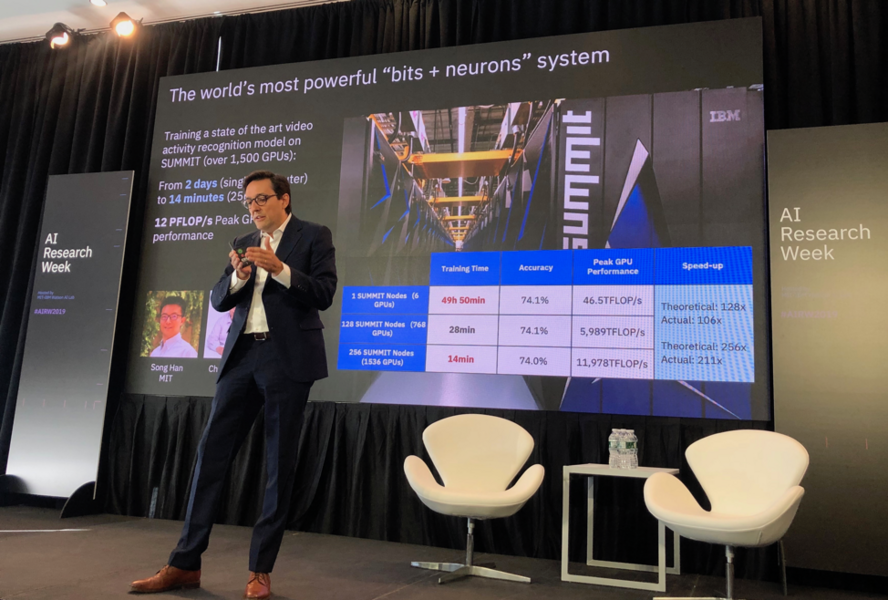

Typically, training such a powerful model would require approximately two days on a machine with a single graphics processor. However, the researchers secured access to the U.S. Department of Energy's Summit supercomputer, currently ranked as the world's fastest. Using 1,536 of Summit's graphics processors, they showed that the model could be trained in just 14 minutes, approaching its theoretical limit. That is up to three times faster than state-of-the-art 3D models, they report.

Dario Gil, director of IBM Research, highlighted this work in his recent opening remarks at AI Research Week, hosted by the MIT-IBM Watson AI Lab.

"Computational requirements for large AI training tasks are doubling every 3.5 months," he noted. "Our ability to continue advancing the technology will depend on strategies like this that combine hyper-efficient algorithms with powerful computing machines."