While humans can easily imagine a blue fire truck despite typically seeing red ones, artificial intelligence systems struggle with such creative leaps beyond their training data.

An AI system's understanding of the world remains constrained by the data it was trained on. A system that has only ever seen red fire trucks has trouble picturing one in any other color. This limitation highlights a fundamental challenge in computer vision.

To expand the creative range of computer vision models, researchers have tried several strategies. Some have photographed objects from unusual angles and in unusual positions to better capture the complexity of the real world. Others have used generative adversarial networks (GANs), a type of AI model that generates new images. Both approaches aim to fill gaps in image datasets so that object-recognition systems become more accurate and less biased.

In a study presented at the International Conference on Learning Representations, MIT researchers developed a kind of creativity test to measure how far GANs can go in transforming existing images. The researchers "steer" the model toward a subject and ask it to render that subject in different ways: in close-up, under different lighting, rotated in space, or in a different color.

The model's outputs vary in subtle and sometimes surprising ways, and those variations closely track the choices human photographers made when framing the scenes in the training images. This connection shows how biases in the original dataset become embedded in the model; the steering technique is designed to expose those limits.

“Latent space contains the fundamental DNA of an image,” explains study co-author Ali Jahanian, an MIT research scientist. “Our research demonstrates that by navigating this abstract space, we can influence which characteristics the GAN expresses, though only to a certain extent. We've discovered that a GAN's creativity is directly constrained by the diversity of its training images.” The study's other authors are MIT PhD student Lucy Chai and senior author Phillip Isola, the Bonnie and Marty (1964) Tenenbaum CD Assistant Professor of Electrical Engineering and Computer Science.
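The idea of "navigating latent space" can be made concrete with a toy sketch. It assumes, for illustration only, that steering means learning a single direction `w` in latent space so that moving a latent code `z` to `z + alpha * w` reproduces a chosen image edit, here a uniform brightness shift of strength `alpha`. The linear "generator" `A`, the three-pixel "image," and the helper names (`generate`, `edit_brightness`, `learn_walk`, `residual`) are hypothetical stand-ins, not the researchers' code or models, which were GANs trained on ImageNet.

```python
import random

# Hypothetical toy "generator": a fixed linear map from a 2-D latent code
# to a 3-pixel "image", G(z) = A @ z. Real GANs are deep, nonlinear networks.
A = [[1.0, 0.0],
     [0.0, 1.0],
     [1.0, 1.0]]


def generate(z):
    """Return the 3-pixel image A @ z for a 2-D latent code z."""
    return [sum(a * zi for a, zi in zip(row, z)) for row in A]


def edit_brightness(image, alpha):
    """Target edit (an assumption for this sketch): brighten every pixel by alpha."""
    return [p + alpha for p in image]


def learn_walk(steps=2000, lr=0.05, seed=0):
    """Learn a latent direction w so that G(z + alpha*w) ~ edit(G(z), alpha).

    Minimizes the squared pixel error with stochastic gradient descent over
    random latent codes z and random edit strengths alpha.
    """
    rng = random.Random(seed)
    w = [0.0, 0.0]
    for _ in range(steps):
        z = [rng.gauss(0.0, 1.0) for _ in range(2)]
        alpha = rng.uniform(-1.0, 1.0)
        walked = generate([zi + alpha * wi for zi, wi in zip(z, w)])
        target = edit_brightness(generate(z), alpha)
        err = [p - t for p, t in zip(walked, target)]
        # d/dw of sum(err**2) = 2 * alpha * A^T err (valid because G is linear).
        grad = [2.0 * alpha * sum(A[i][j] * err[i] for i in range(3))
                for j in range(2)]
        w = [wi - lr * g for wi, g in zip(w, grad)]
    return w


def residual(w):
    """Per-pixel error left per unit alpha: A @ w minus the requested +1 shift."""
    return [p - 1.0 for p in generate(w)]


if __name__ == "__main__":
    w = learn_walk()
    print("learned walk:", w)
    print("leftover error per unit alpha:", residual(w))
```

Because this toy generator can only produce images of the form `A @ z`, the best walk it can learn (`w` close to `[2/3, 2/3]`) still leaves an error of `[-1/3, -1/3, 1/3]` per unit of `alpha`: a perfectly uniform brightening lies partly outside what it can produce. Under these assumptions, that is a loose analogy for the study's finding that a GAN can only be steered as far as its training data allow.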

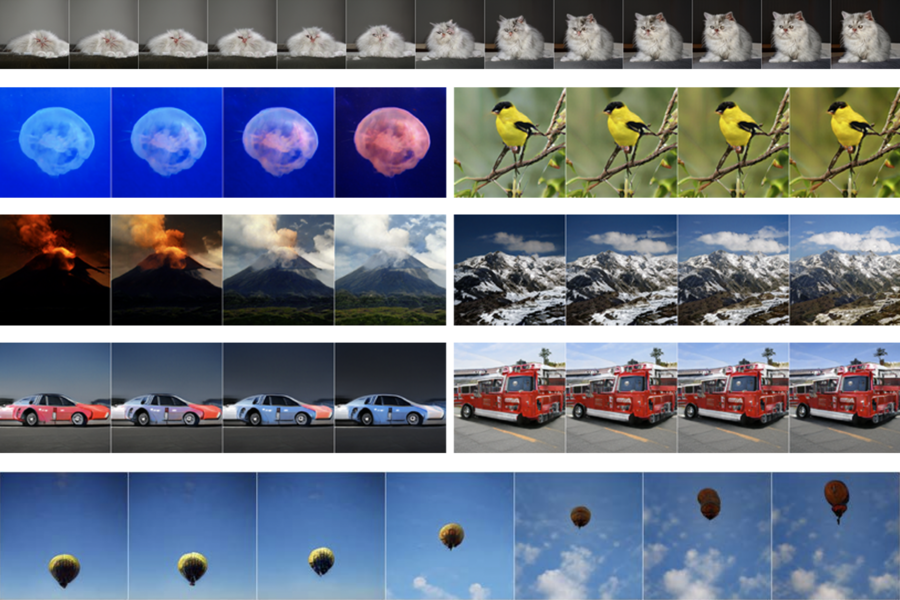

The researchers applied their method to GANs already trained on ImageNet's collection of 14 million photographs, then measured how far the models could go in transforming various categories of animals, objects, and scenes. They found that the degree of creative flexibility varied widely with the kind of subject being manipulated.

For instance, a rising hot air balloon produced more striking poses than a rotated pizza. Likewise, zooming out on a Persian cat changed it far more than zooming out on a robin: the cat dissolved into an indistinct mass of fur the farther it receded, while the bird kept its structure. The model readily recolored cars and jellyfish but refused to show goldfinches or fire trucks in anything other than their conventional colors.

The GANs also seemed remarkably attuned to certain landscapes. When the researchers increased the brightness of a set of mountain photographs, the model added fiery eruptions to a volcano but not to a dormant Alpine peak, which continued to blend into the background. It is as if the models had learned how lighting changes as day turns to night, and also that only volcanoes grow brighter in the dark.

According to the researchers, these findings show how strongly the outputs of deep learning models depend on the data they are trained on. GANs have attracted attention for their ability to extrapolate from data and visualize novel scenes; they can turn a portrait into a Renaissance-style painting or into the likeness of a celebrity. But although GANs can learn intricate details on their own, such as how to separate a landscape into clouds and trees or how to generate images that people find memorable, they remain fundamentally constrained by their training data. Their creations reflect the biases and perspectives of the thousands of photographers who took the original images, both in what those photographers chose to shoot and in how they framed it.

“What I appreciate about this research is how it probes the representations GANs have learned, pushing them to reveal their decision-making processes,” says Jaakko Lehtinen, a professor at Finland's Aalto University and a research scientist at NVIDIA who was not involved in the study. “GANs are remarkable systems that can learn numerous aspects of the physical world, yet they still can't represent images with the physical meaning that humans can inherently understand.”