MIT.nano has announced the first recipients of NCSOFT seed grants, which aim to foster hardware and software innovations in gaming technology. The grants are part of the new MIT.nano Immersion Lab Gaming program, with inaugural funding provided by NCSOFT, a video game developer and founding member of the MIT.nano Consortium.

The newly funded projects address topics including 3-D/4-D data interaction and analysis, behavioral learning, sensor fabrication, light field manipulation, and micro-display optics.

"Emerging technologies and novel gaming paradigms will fundamentally transform how researchers conduct their work by enabling immersive visualization and multi-dimensional interaction capabilities," explains MIT.nano Associate Director Brian W. Anthony. "This year's selected projects demonstrate the extraordinary breadth of fields that will be enhanced and influenced by augmented and virtual reality innovations."

In addition to funding, each grant recipient will receive resources to help build a community of users of MIT.nano's Immersion Lab.

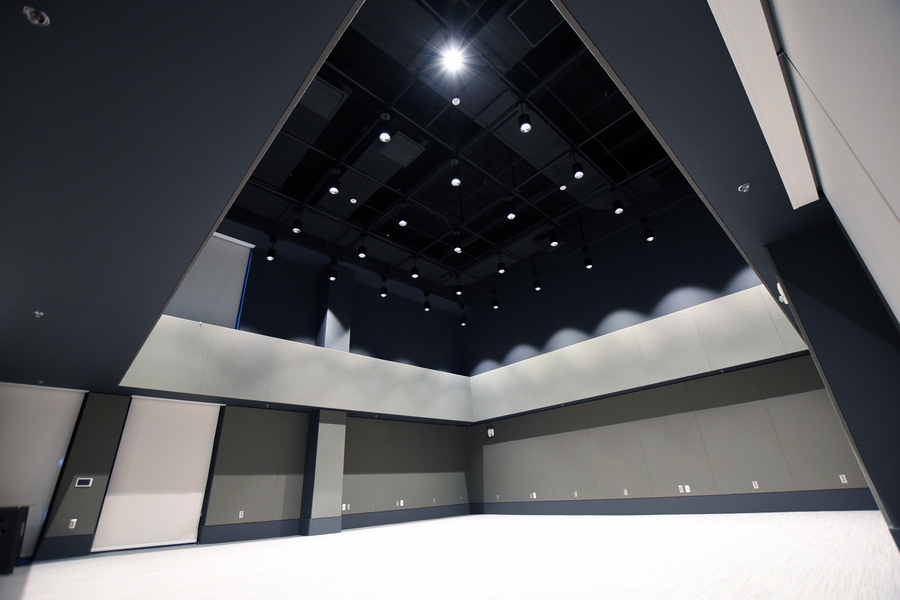

The MIT.nano Immersion Lab is a two-story immersive space dedicated to visualization, augmented and virtual reality (AR/VR), and the depiction and analysis of spatially related data. The facility is currently being outfitted with equipment and software tools and will open this semester to researchers and educators interested in using and creating new experiences, including those developed through the seed grant projects.

The five projects selected to receive NCSOFT seed grants are:

Stefanie Mueller: Bridging Virtual and Physical Realms

Virtual gaming experiences often involve physical props, such as steering wheels, tennis rackets, or other objects that players handle in the real world to trigger reactions in the virtual environment. Build-it-yourself cardboard kits have lowered the cost of these props and made them more accessible, but pre-cut kits remain limited in form and function. What if players could build dynamic props that evolve as they progress through a game?

Department of Electrical Engineering and Computer Science (EECS) Professor Stefanie Mueller aims to enhance the user experience by developing a new type of gameplay with tighter connections between the virtual and physical worlds. In Mueller's game, players unlock physical templates after completing virtual challenges, build props from these templates, and then unlock new functions for the same props as the game progresses. The props can be expanded over time and take on new meanings, and users learn technical skills by building physical prototypes.

Luca Daniel and Micha Feigin-Almon: Replicating Human Movement Patterns in Virtual Characters

Athletes, martial artists, and ballerinas share the ability to move their bodies elegantly, converting energy efficiently while minimizing the risk of injury. Professor Luca Daniel of EECS and the Research Laboratory of Electronics and Micha Feigin-Almon, a research scientist in mechanical engineering, plan to compare the movements of trained and untrained individuals to learn the limits of the human body, with the goal of generating elegant, realistic movement trajectories for virtual reality characters.

Beyond gaming software, their models of different movement patterns will also predict joint stresses, which could lead to nervous system models that benefit both artists and athletes.

Wojciech Matusik: Leveraging Phase-Only Holographic Technology

Holographic displays are well suited to augmented and virtual reality, but critical issues remain. Out-of-focus objects look unnatural, and complex holograms must be converted to phase-only or amplitude-only form before they can be physically realized. To address these issues, EECS Professor Wojciech Matusik proposes using machine learning to synthesize phase-only holograms in an end-to-end framework. This learning-based approach could allow holographic displays to present realistic three-dimensional objects.

"While our system is specifically engineered for varifocal, multifocal, and light field displays, we firmly believe that extending its capabilities to work with holographic displays holds the greatest potential to revolutionize the future of near-eye displays and deliver unparalleled gaming experiences," states Matusik.

Fox Harrell: Cultivating Socially Impactful Behavioral Learning

Project VISIBLE (Virtuality for Immersive Socially Impactful Behavioral Learning Enhancement) uses virtual reality in an educational setting to teach users how to recognize, cope with, and avoid committing microaggressions. In a virtual environment designed by Comparative Media Studies Professor Fox Harrell, users will first encounter micro-insults, followed by major microaggression themes. Users' physical responses drive the narrative, so a person can play the game multiple times, reach different conclusions, and learn the implications of various social behaviors.

Juejun Hu: Developing Wide-Field High-Resolution Displays

Professor Juejun Hu of the Department of Materials Science and Engineering is developing high-performance, ultra-thin immersive micro-displays for AR/VR applications. These displays, based on metasurface optics, will allow a large, continuous field of view, on-demand control of optical wavefronts, high-resolution projection, and a compact, flat, lightweight engine. Current commercial waveguide AR/VR systems offer a field of view of less than 45 degrees; Hu and his team aim to design a high-quality display with a field of view close to 180 degrees.