Traditional statistical wisdom has long held that a model should have fewer parameters than there are data points, to prevent overfitting. Contemporary deep learning defies this principle: its networks often have orders of magnitude more parameters than training examples. Surprisingly, these massive networks predict remarkably well, and their performance tends to improve as the parameter count grows. This paradox has puzzled researchers and practitioners alike and has prompted closer study of how the complexity of neural networks is actually controlled.

Machine learning experts have recognized that good performance depends on controlling the complexity of the network, which is not simply determined by its number of parameters. The complexity of a classifier such as a neural network is the "size" of the space of functions it can represent, measured by technical quantities such as the Vapnik-Chervonenkis dimension, covering numbers, or Rademacher complexity. Classically, this complexity is controlled by constraining the norm of the parameters during training. Yet deep learning appears to succeed without any such explicit constraint, which seems to contradict classical learning theory and raises fundamental questions about its theoretical foundations.
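
Rademacher complexity is the easiest of these measures to compute by hand. As a minimal sketch, assuming the simplest possible class, bias-free linear predictors `x -> w·x` with the norm constraint `||w|| <= B` (not the deep networks the study analyzes), the code below computes the empirical Rademacher complexity exactly by enumerating every sign pattern for a small random sample. It illustrates the classical point above: the measure scales with the norm bound `B`, while embedding the same data in 50 dimensions instead of 3, which multiplies the number of parameters, leaves it unchanged. The function names and the random data are illustrative assumptions.

```python
import itertools
import math
import random


def rademacher_linear(xs, bound):
    """Exact empirical Rademacher complexity of {x -> w.x : ||w||_2 <= bound} on xs.

    For this class the supremum over w has a closed form,
        sup_{||w|| <= B} (1/n) * sum_i s_i * (w . x_i) = (B / n) * ||sum_i s_i * x_i||,
    so we average that value over all 2**n sign vectors s (feasible only for small n).
    """
    n, dim = len(xs), len(xs[0])
    total = 0.0
    for signs in itertools.product((-1.0, 1.0), repeat=n):
        # Signed sum of the sample points for this sign pattern.
        signed_sum = [sum(s * x[k] for s, x in zip(signs, xs)) for k in range(dim)]
        total += math.sqrt(sum(c * c for c in signed_sum))
    return bound / n * total / 2 ** n


def jensen_bound(xs, bound):
    # Standard upper bound (B / n) * sqrt(sum_i ||x_i||^2); the dimension never appears.
    return bound / len(xs) * math.sqrt(sum(c * c for x in xs for c in x))


# Hypothetical sample: 8 random points in 3 dimensions.
rng = random.Random(0)
xs = [[rng.gauss(0.0, 1.0) for _ in range(3)] for _ in range(8)]
# The same points embedded in 50 dimensions: many more parameters, same norms.
padded = [x + [0.0] * 47 for x in xs]

for B in (1.0, 2.0):
    print(B, rademacher_linear(xs, B), rademacher_linear(padded, B), jensen_bound(xs, B))
```

In this linear case the norm bound, not the parameter count, is what sets the complexity; the puzzle described here is that deep networks are usually trained with no such explicit bound.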

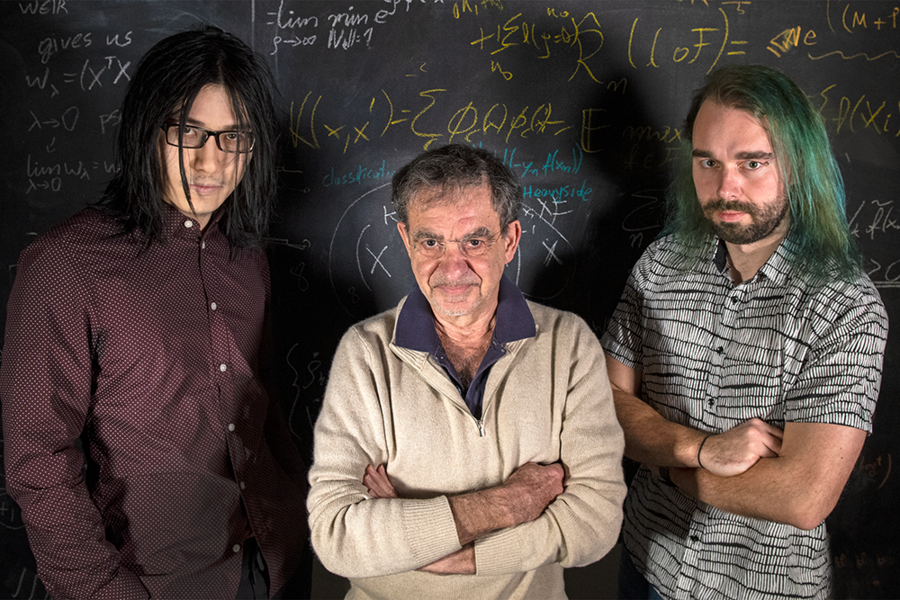

A study published in Nature Communications, titled "Complexity Control by Gradient Descent in Deep Networks," offers new insight into this puzzle. Researchers at the Center for Brains, Minds, and Machines (CBMM), led by its director, Tomaso Poggio, focused on the most successful application of modern deep learning: classification.

"Our research reveals that in classification scenarios, model parameters don't actually converge but instead grow indefinitely during gradient descent," explains co-author and MIT PhD candidate Qianli Liao. "The key insight is that only normalized parameters—their direction, not magnitude—affect classification outcomes. We demonstrated that standard gradient descent on unnormalized parameters naturally induces the desired complexity control on their normalized counterparts."
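
To make this claim concrete in the simplest setting, here is a minimal sketch (pure Python; all names and data are hypothetical) of plain gradient descent on the logistic loss of a linear classifier with no bias term, trained on a small linearly separable dataset. It is not the deep-network analysis from the paper, only the linear special case that motivated it. The weight norm keeps growing from one checkpoint to the next, while the normalized weight vector, which alone determines the predicted labels, barely moves between the last two checkpoints.

```python
import math


def train_logistic(xs, ys, lr=0.5, steps=20000, checkpoints=(100, 1000, 10000, 20000)):
    """Plain gradient descent on the mean logistic loss of a bias-free linear classifier.

    Returns a dict mapping each checkpoint step to a copy of the weight vector.
    """
    n, dim = len(xs), len(xs[0])
    w = [0.0] * dim
    history = {}
    for t in range(1, steps + 1):
        grad = [0.0] * dim
        for x, y in zip(xs, ys):
            margin = y * sum(wk * xk for wk, xk in zip(w, x))
            # d/dw log(1 + exp(-margin)) = -y * x / (1 + exp(margin))
            coef = -y / (1.0 + math.exp(margin)) / n
            for k in range(dim):
                grad[k] += coef * x[k]
        w = [wk - lr * gk for wk, gk in zip(w, grad)]
        if t in checkpoints:
            history[t] = list(w)
    return history


def norm(v):
    return math.sqrt(sum(c * c for c in v))


def cosine(u, v):
    return sum(a * b for a, b in zip(u, v)) / (norm(u) * norm(v))


def predict(w, x):
    # The label depends only on the sign of w.x, i.e. only on the direction of w.
    return 1 if sum(wk * xk for wk, xk in zip(w, x)) > 0 else -1


# Toy linearly separable data (hypothetical).
xs = [[2.0, 1.0], [1.0, 3.0], [-1.0, -1.0], [-2.0, 0.5]]
ys = [1, 1, -1, -1]

history = train_logistic(xs, ys)
ts = sorted(history)
for t in ts:
    w = history[t]
    print(t, round(norm(w), 3), [round(c / norm(w), 4) for c in w])

w_last = history[ts[-1]]
print("cosine between last two checkpoints:", cosine(history[ts[-2]], w_last))
print("same labels after scaling w by 10:",
      [predict(w_last, x) for x in xs] == [predict([10 * c for c in w_last], x) for x in xs])
```

For separable data in this linear case the norm is known to grow only roughly logarithmically in the number of steps, which is why the direction settles slowly.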

"We've understood for years that gradient descent provides implicit regularization in shallow linear networks like kernel machines," notes Poggio. "In these simpler cases, we obtain optimal maximum-margin, minimum-norm solutions. This prompted us to explore whether similar mechanisms operate in deep networks."
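
The "minimum-norm" half of this remark can be checked directly in the simplest linear case. The sketch below (pure Python; the helper names and the 2×3 data matrix are hypothetical) runs gradient descent from zero on an underdetermined least-squares problem, which has infinitely many exact fits, and compares the result with the minimum-norm interpolant `X^T (X X^T)^{-1} y`. Every gradient `X^T (Xw - y)` lies in the row space of `X`, so iterates started at zero never leave it, and the only exact fit inside that row space is the minimum-norm one.

```python
import math


def solve(a, b):
    """Solve the square linear system a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [bi] for row, bi in zip(a, b)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def matvec(X, w):
    # Computes X w.
    return [sum(xk * wk for xk, wk in zip(row, w)) for row in X]


def transpose_times(X, a):
    # Computes X^T a.
    return [sum(X[i][k] * a[i] for i in range(len(X))) for k in range(len(X[0]))]


def min_norm_solution(X, y):
    # Closed form X^T (X X^T)^{-1} y, valid when X has full row rank.
    gram = [[sum(p * q for p, q in zip(ri, rj)) for rj in X] for ri in X]
    return transpose_times(X, solve(gram, y))


def gd_least_squares(X, y, lr=0.1, steps=500):
    # Gradient descent on 0.5 * ||X w - y||^2, starting from w = 0.
    w = [0.0] * len(X[0])
    for _ in range(steps):
        residual = [p - t for p, t in zip(matvec(X, w), y)]
        grad = transpose_times(X, residual)
        w = [wk - lr * gk for wk, gk in zip(w, grad)]
    return w


X = [[1.0, 0.0, 1.0], [0.0, 1.0, 1.0]]  # 2 equations, 3 unknowns
y = [1.0, 2.0]
w_gd = gd_least_squares(X, y)
w_min = min_norm_solution(X, y)  # [0.0, 1.0, 1.0] for this X and y
w_other = [1.0, 2.0, 0.0]        # another exact fit: w_min + (1, 1, -1)

print("gradient descent:", [round(c, 6) for c in w_gd])
print("minimum-norm:    ", w_min)
print("norms:", math.sqrt(sum(c * c for c in w_gd)), math.sqrt(sum(c * c for c in w_other)))
```

Gradient descent is never told to minimize the norm, yet among all exact fits it lands on the smallest one; this is the sense in which the regularization is implicit.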

The researchers found that similar mechanisms are indeed at work in deep networks. "Understanding convergence in deep networks reveals clear pathways for algorithm improvement," states co-author and MIT postdoc Andrzej Banburski. "We've already discovered that managing the divergence rate of unnormalized parameters yields better-performing solutions more quickly."

These findings help demystify deep learning's success, showing that it can be explained within established theoretical frameworks rather than by some mysterious mechanism. Beyond advancing our understanding of how complexity is controlled in neural networks, the work offers practical guidance for developing more efficient and accurate deep learning algorithms.