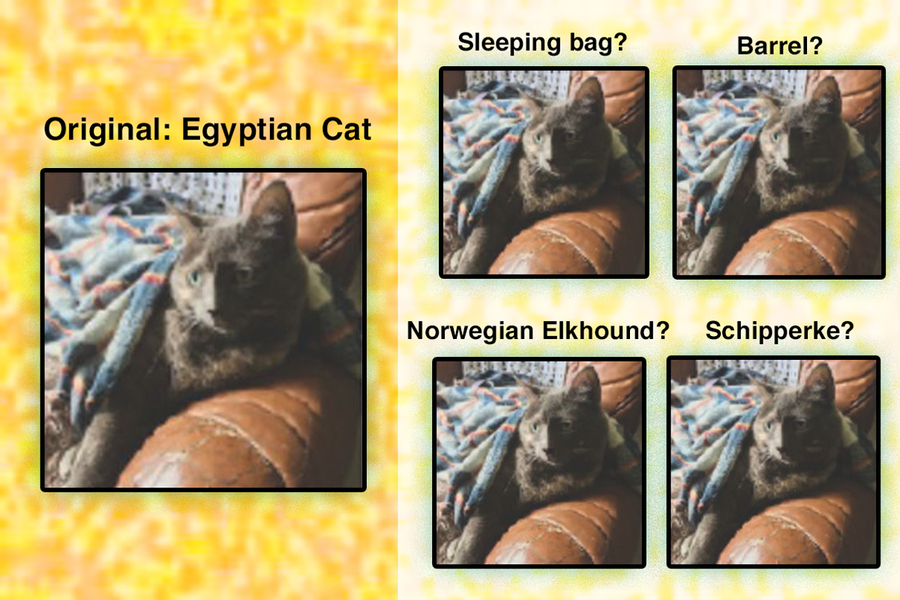

Computer vision models built on convolutional neural networks can recognize objects nearly as accurately as humans do. These models nonetheless have a critical vulnerability: tiny changes to an image, virtually undetectable to a human observer, can trigger egregious errors, such as classifying a cat as a tree. This weakness poses significant challenges for real-world AI vision applications.

Neuroscientists from MIT, Harvard University, and IBM have developed a way to reduce this vulnerability. Their approach adds a layer to existing AI vision models that mimics the earliest stage of processing in the brain's visual cortex. Their study shows that this neuroscience-inspired layer makes the models substantially more resistant to deceptive image manipulations.

"By aligning artificial intelligence systems more closely with the brain's primary visual cortex at this crucial processing stage, we've observed remarkable enhancements in robustness against diverse forms of image interference and corruption," explains Tiago Marques, an MIT postdoctoral researcher and principal contributor to the study. This brain-inspired approach represents a significant leap forward in developing more reliable AI vision systems.

Convolutional neural networks underlie many artificial intelligence applications, from autonomous vehicles and smart manufacturing to medical diagnostics. Joel Dapello, a Harvard graduate student and the study's other lead author, says that "integrating our neuroscience-based methodology could significantly enhance system reliability while creating AI vision capabilities that more closely mirror human visual perception."

"Effective scientific theories about the brain's visual processing must account for both its internal neural mechanisms and extraordinary resilience to visual interference," states James DiCarlo, director of MIT's Department of Brain and Cognitive Sciences, investigator at the Center for Brains, Minds, and Machines and the McGovern Institute for Brain Research, and senior author of the research. "Our investigation demonstrates that advancing our understanding of neuroscience directly translates into tangible improvements in artificial intelligence engineering and practical applications."

The study, which is being presented at the NeurIPS conference this month, is also co-authored by MIT graduate student Martin Schrimpf, visiting MIT researcher Franziska Geiger, and David Cox, co-director of the MIT-IBM Watson AI Lab.

Object recognition stands as a fundamental capability of biological vision systems. Within milliseconds, visual data traverses the ventral visual pathway to reach the brain's inferior temporal cortex, where specialized neurons store the essential information required for object classification. The ventral stream comprises multiple processing stages, each executing distinct computational functions. The initial stage, designated as V1, ranks among the most thoroughly mapped regions of the brain, hosting neurons specifically tuned to detect elementary visual elements including edges and contours.

"Current understanding suggests V1 processes localized edges, object contours, and textural information while performing fine-grained image segmentation," Marques elaborates. "This preliminary analysis subsequently informs higher-level visual regions responsible for identifying object shapes and textural properties. The visual system operates through this hierarchical architecture, with early-stage neurons selectively responding to localized features such as diminutive, elongated edge segments."

For decades, scientists have tried to build computational models that match the object recognition abilities of human vision. Today's leading computer vision systems already draw on what is known about visual processing in the brain. However, neuroscientists still do not know enough about how the entire ventral visual stream is connected to build a model that mimics it precisely. Instead, researchers use machine learning to train convolutional neural networks on specific visual tasks. Trained this way, a model learns to recognize objects after being shown millions of labeled images.

Numerous convolutional networks demonstrate exceptional performance, yet the internal mechanisms enabling their object recognition capabilities remain largely opaque. In 2013, DiCarlo's laboratory revealed that certain neural networks could not only identify objects with high accuracy but also predict primate neural responses to identical stimuli more effectively than previous models. Despite these advances, current neural networks still fail to perfectly replicate neural activity patterns throughout the ventral visual stream, especially during initial processing stages including V1.

Additionally, these models exhibit susceptibility to "adversarial attacks"—deliberately crafted image modifications that can systematically deceive artificial intelligence systems. Minor alterations, such as adjusting the color values of select pixels, can cause models to fundamentally misclassify objects, errors that human observers would rarely or never commit under similar conditions.
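To make the idea concrete, here is a minimal sketch of how many tiny pixel changes can add up to a flipped decision. It uses a toy linear classifier and a sign-of-gradient step (in the style of the fast gradient sign method), which is a stand-in chosen for illustration: the article does not say which attacks the study used, and the weights, pixel values, and `epsilon` below are all hypothetical.

```python
def score(weights, pixels):
    """Toy linear classifier: a positive score means 'cat', otherwise 'tree'."""
    return sum(w * p for w, p in zip(weights, pixels))

def sign(value):
    """Return -1, 0, or 1 according to the sign of value."""
    return (value > 0) - (value < 0)

def perturb(weights, pixels, epsilon):
    """Shift every pixel by at most epsilon in the direction that lowers the score.

    For a linear model the gradient of the score with respect to the pixels
    is the weight vector itself, so the step is -epsilon * sign(weight).
    """
    return [p - epsilon * sign(w) for w, p in zip(weights, pixels)]

# Hypothetical 100-pixel "image" and alternating-sign weights.
weights = [0.05 if i % 2 == 0 else -0.05 for i in range(100)]
pixels = [0.52 if i % 2 == 0 else 0.48 for i in range(100)]

adversarial_pixels = perturb(weights, pixels, epsilon=0.03)
clean_score = score(weights, pixels)
attacked_score = score(weights, adversarial_pixels)

clean_label = "cat" if clean_score > 0 else "tree"
attacked_label = "cat" if attacked_score > 0 else "tree"
largest_change = max(abs(a - p) for a, p in zip(adversarial_pixels, pixels))
```

No pixel moves by more than `epsilon`, yet the label changes, because each small shift is chosen to push the score the same way. Attacks on deep networks rely on the same accumulation, applied to a far more complex model.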

The researchers began by evaluating 30 models and found a correlation: models whose internal responses more closely matched those of biological V1 were also more resistant to adversarial attacks. This suggested that giving a model a more brain-like V1 stage could improve its robustness. To test the idea, the scientists built a V1 model based on established neuroscientific findings and placed it at the front of existing convolutional neural networks designed for object recognition.
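Structurally, placing a V1 stage in front of an existing network is a composition: the image first passes through a front end, and the original model then sees the front end's output instead of raw pixels. The sketch below shows only that wiring. The two hand-set 3x3 filters, the rectification step, and `toy_back_end` are hypothetical placeholders; the article does not describe the internals of the team's V1 model.

```python
def conv2d_valid(image, kernel):
    """Plain 'valid' 2D cross-correlation on nested lists."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [[sum(kernel[i][j] * image[r + i][c + j]
                 for i in range(kh) for j in range(kw))
             for c in range(out_w)]
            for r in range(out_h)]

# Fixed, hand-set oriented filters standing in for a V1-like filter bank.
FIXED_FILTERS = [
    [[-1, 0, 1], [-1, 0, 1], [-1, 0, 1]],   # responds to vertical edges
    [[-1, -1, -1], [0, 0, 0], [1, 1, 1]],   # responds to horizontal edges
]

def v1_like_front_end(image):
    """Apply each fixed filter, then rectify; none of these weights is trained."""
    return [[[max(0.0, v) for v in row] for row in conv2d_valid(image, kernel)]
            for kernel in FIXED_FILTERS]

def with_front_end(back_end):
    """Wrap an existing model so it receives front-end feature maps."""
    return lambda image: back_end(v1_like_front_end(image))

def toy_back_end(feature_maps):
    """Hypothetical stand-in for a trained network: picks the most active channel."""
    totals = [sum(sum(row) for row in fmap) for fmap in feature_maps]
    return "vertical" if totals[0] >= totals[1] else "horizontal"

model = with_front_end(toy_back_end)

vertical_image = [[0.0, 0.0, 1.0, 1.0] for _ in range(4)]
horizontal_image = [[0.0] * 4, [0.0] * 4, [1.0] * 4, [1.0] * 4]
vertical_label = model(vertical_image)
horizontal_label = model(horizontal_image)
```

The design point is that the back end is unchanged code; only its input differs, which is what allows the same front end to be attached to several existing models.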

When the researchers added their V1 layer, which is itself implemented as a convolutional neural network, to three existing models, those models became about four times more resistant to making mistakes on adversarially manipulated images. The modified models also performed better at identifying objects in images degraded by blurring and other distortions commonly encountered in real-world conditions.

"Adversarial vulnerability represents a significant challenge hindering the widespread implementation of deep neural networks in critical applications," Cox observes. "The substantial robustness improvements achieved through neuroscience-inspired architectural elements highlight the tremendous potential for bidirectional knowledge exchange between artificial intelligence and neuroscience disciplines. This cross-pollination of ideas promises to accelerate progress in both fields."

Today's best defenses against adversarial attacks typically rely on computationally expensive training that teaches models to recognize deliberately manipulated images. The V1-based approach requires no such additional training, and it also handles a wide range of image distortions beyond adversarial attacks.

The researchers are now trying to identify which features of their V1 model make it more resistant to adversarial attacks. Knowing this could help them build even more robust models in the future, and could also shed light on how the human brain recognizes objects.

"A significant benefit of our approach is the ability to establish direct mappings between model components and specific neuronal populations in the biological brain," Dapello notes. "This correspondence provides a powerful framework for both neuroscientific investigation and continued refinement of artificial vision systems, creating a virtuous cycle of improvement across both domains."

The research was funded by the PhRMA Foundation Postdoctoral Fellowship in Informatics, the Semiconductor Research Corporation, DARPA, the MIT Shoemaker Fellowship, the U.S. Office of Naval Research, the Simons Foundation, and the MIT-IBM Watson AI Lab.