Researchers at the Massachusetts Institute of Technology (MIT) have developed a method for assessing how robust neural networks are across a range of tasks. The technique detects cases where a model produces an incorrect output that it should have gotten right.

Convolutional neural networks (CNNs) are widely used in computer vision to analyze and classify images. But they are vulnerable to alterations that are nearly invisible to people: changing a few pixels in ways a human would not notice can cause the network to assign a completely different label. These inputs are known as 'adversarial examples,' and they are a security concern for deployed systems. Studying how a network responds to them helps researchers find weaknesses that could affect its performance on unexpected inputs in the real world.

The stakes are highest in safety-critical applications such as autonomous vehicles. Self-driving cars can use CNNs to interpret visual input and respond to it, for example by recognizing a stop sign and braking. Yet research from 2018 showed that carefully crafted stickers placed on traffic signs could fool such a system into misreading the sign. With a stop sign, that could mean the car does not stop at all, with potentially life-threatening consequences.

Until now, there has been no way to fully evaluate the resilience of a large neural network to adversarial examples across all inputs. The MIT team presented a method to address that gap at the International Conference on Learning Representations. For any given input, the technique either finds an adversarial example or proves that every sufficiently similar variation of the input is classified correctly. The share of inputs that fall into each group gives researchers a measure of how robust a network is for a particular task.

Similar evaluation techniques have been proposed before, but they have not scaled to more complex neural networks. The MIT method runs roughly a thousand times faster than those approaches and scales to larger, more complex CNNs.

The researchers tested the method on a CNN trained to classify images from the MNIST dataset of handwritten digits, which contains 60,000 training images and 10,000 test images. They found that about 4% of test inputs could be slightly modified to produce adversarial examples that cause the model to misclassify, showing that the problem arises even on a comparatively simple task.

Lead researcher Vincent Tjeng, a graduate student at MIT's Computer Science and Artificial Intelligence Laboratory (CSAIL), explains the fundamental concern: 'Adversarial examples exploit blind spots in AI systems, causing machines to make errors that humans would never make. Our methodology aims to systematically determine whether minor input modifications could trigger dramatically different outputs from a neural network. This capability allows us to rigorously assess the resilience of various AI architectures—either by identifying specific adversarial vulnerabilities or mathematically confirming their absence for particular inputs.'

Tjeng's co-authors on the paper are CSAIL graduate student Kai Xiao and Russ Tedrake, a CSAIL researcher and a professor in the Department of Electrical Engineering and Computer Science (EECS).

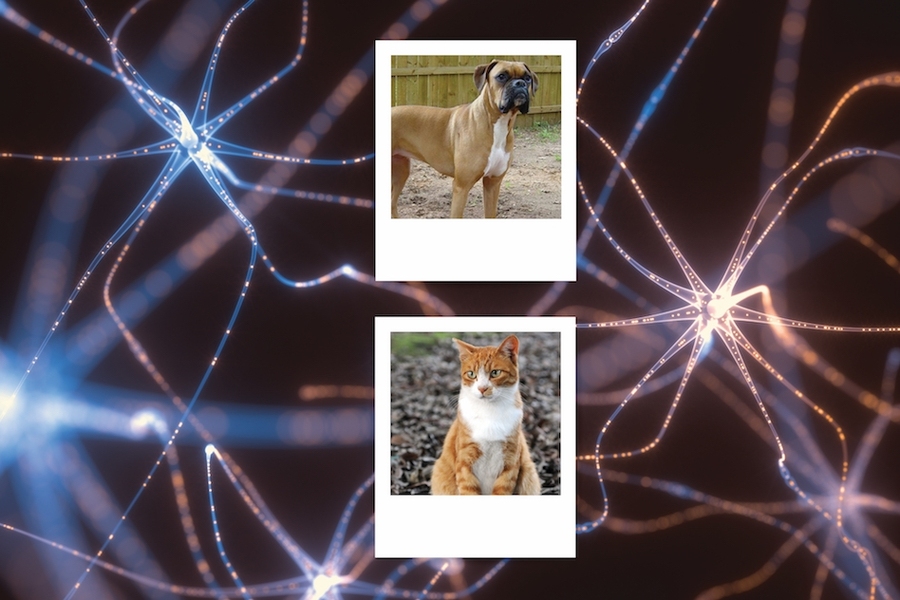

CNNs process images through many computational layers made up of units called neurons. In a network that classifies images, the final layer has one neuron for each category, and the network assigns the image to the category whose neuron has the highest activation value. Consider a CNN that sorts images into two categories, 'cat' and 'dog.' An image of a cat should produce a higher activation in the 'cat' neuron than in the 'dog' neuron. An adversarial example arises when a tiny modification to that image pushes the 'dog' neuron's activation above the 'cat' neuron's, so the network reports 'dog.'
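
The classification rule described above can be sketched in a few lines of Python. This is only an illustration: the function names and the activation values are hypothetical and are not taken from the researchers' code.

```python
def predict(activations):
    """Return the label whose output neuron has the highest activation."""
    return max(activations, key=activations.get)


def is_adversarial(true_label, clean_activations, perturbed_activations):
    """True if the clean input is classified correctly but the perturbed one is not."""
    return (predict(clean_activations) == true_label
            and predict(perturbed_activations) != true_label)


# Hypothetical final-layer activations for a cat photo, before and after
# a small perturbation of its pixels.
clean = {"cat": 2.3, "dog": 1.9}
perturbed = {"cat": 2.0, "dog": 2.1}

print(predict(clean))
print(predict(perturbed))
print(is_adversarial("cat", clean, perturbed))
```

Here the perturbation only needs to close a gap of 0.4 between the two neurons, which is why small input changes can be enough to flip the label.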

The MIT technique accounts for every allowed modification to each pixel of the image. If the CNN assigns the correct class ('cat') to every modified version, then no adversarial example exists for that image within the allowed range of changes. Testing a sample of modified images cannot provide that kind of guarantee.

The technique is built on a modified form of mixed-integer programming, an optimization method in which some of the variables are restricted to integer values. Mixed-integer programming is used to find the maximum of an objective function subject to constraints on the variables. The researchers adapted it so that robustness evaluation scales efficiently to more complex neural networks, where earlier approaches became too slow.
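
To make the role of the integer variables concrete, here is a minimal sketch of one common way to express a single neuron in a mixed-integer program. It assumes a ReLU neuron (output `y = max(0, x)`) whose input `x` is known to lie between `lower` and `upper`. The article does not describe the team's encoding, so the constraints and names below are illustrative assumptions, not their formulation.

```python
def relu_encoding_solutions(x, lower, upper):
    """List the (a, y_min, y_max) choices allowed by a big-M style ReLU encoding.

    Assumes lower < 0 < upper and lower <= x <= upper. The constraints are:
        y >= 0
        y >= x
        y <= upper * a
        y <= x - lower * (1 - a)
        a in {0, 1}   (the integer variable: is the neuron active?)
    """
    solutions = []
    for a in (0, 1):
        y_min = max(0.0, x)
        y_max = min(upper * a, x - lower * (1 - a))
        if y_min <= y_max:  # this choice of `a` leaves some y feasible
            solutions.append((a, y_min, y_max))
    return solutions


def relu(x):
    return max(0.0, x)


LOWER, UPPER = -3.0, 5.0  # hypothetical bounds on the neuron's input
for x in (-3.0, -1.0, 0.0, 2.0, 5.0):
    for a, y_min, y_max in relu_encoding_solutions(x, LOWER, UPPER):
        # Every feasible choice pins y to exactly one value: ReLU(x).
        assert y_min == y_max == relu(x)
```

The point of the sketch is that linear constraints plus one binary variable reproduce the neuron's behavior exactly, so a solver reasoning over these constraints is reasoning about the real network rather than an approximation of it.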

In their experiments, the researchers set limits on how much each pixel in an input image could be brightened or darkened. Within those limits, a modified image still looks the same as the original to a human observer, so a well-behaved CNN should keep the same classification. Mixed-integer programming is then used to find the smallest pixel modification that causes a misclassification, which marks the point where the network stops being reliable for that image.
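
The per-pixel limits can be pictured as a box around the original image. The sketch below assumes pixel intensities in the range 0 to 1 and a single limit `epsilon` shared by all pixels; both are assumptions for illustration, as are the function names.

```python
def within_budget(original, modified, epsilon):
    """True if no pixel was brightened or darkened by more than epsilon."""
    return all(abs(m - o) <= epsilon for o, m in zip(original, modified))


def project_to_budget(original, candidate, epsilon):
    """Clamp each candidate pixel into [o - epsilon, o + epsilon] and into [0, 1]."""
    projected = []
    for o, c in zip(original, candidate):
        low = max(0.0, o - epsilon)
        high = min(1.0, o + epsilon)
        projected.append(min(max(c, low), high))
    return projected


# Hypothetical three-pixel "image" and a candidate modification that is too large.
original = [0.0, 0.5, 1.0]
candidate = [0.5, 0.5625, 0.25]
epsilon = 0.125

print(within_budget(original, candidate, epsilon))
allowed = project_to_budget(original, candidate, epsilon)
print(allowed)
print(within_budget(original, allowed, epsilon))
```

A verifier only considers images for which `within_budget` holds; anything outside the box is treated as a visibly different image.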

The idea is that changing pixel values can raise the activation of a neuron for the wrong class. Given a cat image and the pet-classifying CNN, the algorithm keeps adjusting the pixels to see whether it can push the 'dog' neuron's activation above the 'cat' neuron's.

If the algorithm succeeds, it has found at least one adversarial example for the input image. It can then keep refining the modification to find the smallest change that still causes the misclassification, a quantity the researchers call the 'minimum adversarial distortion.' The larger the minimum adversarial distortion, the more resistant the network is to adversarial examples. If instead the correct neuron keeps the highest activation for every allowed combination of pixel modifications, the algorithm can certify that the image has no adversarial example within those limits.
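
For intuition, the minimum adversarial distortion can be computed by hand for a much simpler model than a CNN. The sketch below assumes a purely linear classifier, where each class score is a weighted sum of the pixels, and it ignores the valid pixel range. In that special case the worst allowed perturbation has a closed form, so no mixed-integer solver is needed. This is a stand-in for illustration, not the researchers' algorithm, and all names and numbers are hypothetical.

```python
def scores(weights, biases, pixels):
    """Linear class scores: one weighted sum of the pixels per label."""
    return {label: sum(w * p for w, p in zip(weights[label], pixels)) + biases[label]
            for label in weights}


def minimum_adversarial_distortion(weights, biases, pixels, true_label):
    """Smallest per-pixel change at which some other class can tie the true class.

    Moving every pixel by at most eps changes the score gap between two classes
    by at most eps * sum(|w_true - w_other|), so the gap first closes at
    eps = gap / sum(|w_true - w_other|).
    """
    s = scores(weights, biases, pixels)
    best = float("inf")
    for label in weights:
        if label == true_label:
            continue
        gap = s[true_label] - s[label]
        if gap <= 0:
            return 0.0  # already misclassified without any modification
        sensitivity = sum(abs(wt - wo)
                          for wt, wo in zip(weights[true_label], weights[label]))
        if sensitivity > 0:
            best = min(best, gap / sensitivity)
    return best


def certified(weights, biases, pixels, true_label, epsilon):
    """True if no modification of at most epsilon per pixel can change the label."""
    return epsilon < minimum_adversarial_distortion(weights, biases, pixels, true_label)


# Hypothetical two-pixel image and two-class linear model.
weights = {"cat": [1.0, 0.0], "dog": [0.0, 1.0]}
biases = {"cat": 0.0, "dog": 0.0}
image = [0.75, 0.25]

print(minimum_adversarial_distortion(weights, biases, image, "cat"))
print(certified(weights, biases, image, "cat", 0.125))
print(certified(weights, biases, image, "cat", 0.5))
```

In this toy case the two scores tie once each pixel is allowed to move by 0.25, so a limit of 0.125 is certified and a limit of 0.5 is not. For a real CNN the gap depends on the pixels in a far more complicated way, which is where the mixed-integer search comes in.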

Tjeng elaborates on their verification approach: 'Our primary objective is to determine whether any modification within our defined constraints could cause an input image to be incorrectly classified. When no such modification exists, we can provide a mathematical guarantee that we've exhaustively examined the entire space of permissible alterations and confirmed that no manipulated version of the original image would result in misclassification—a significant advancement in AI safety assurance.'

The end result is the percentage of input images that have at least one adversarial example, together with a guarantee that the remaining images have none. Real-world CNNs can contain millions of neurons and are trained on very large datasets with many categories, so the scalability of the technique is critical, Tjeng says.

'Regardless of their specific application domain, convolutional neural networks must demonstrate resilience against adversarial manipulation to ensure reliable operation,' Tjeng asserts. 'The higher the proportion of test samples we can mathematically certify as free from adversarial vulnerabilities, the more confidently we can predict the network's performance stability when encountering unexpected input variations in deployment scenarios.'

Matthias Hein, a professor of mathematics and computer science at Saarland University, was not involved in developing the method but has tested it himself. He explains why such guarantees matter: 'Establishing mathematically provable robustness boundaries is crucial, as virtually all conventional defense mechanisms have subsequently been compromised. Our research team implemented this verification framework to demonstrate our networks' genuine resilience, enabling us to validate their security properties in ways impossible through standard training approaches alone.'