Software now underpins virtually every business operation, from inventory management to customer engagement. This growing dependence has created unprecedented demand for skilled developers and fueled the development of automated programming tools that streamline their work.

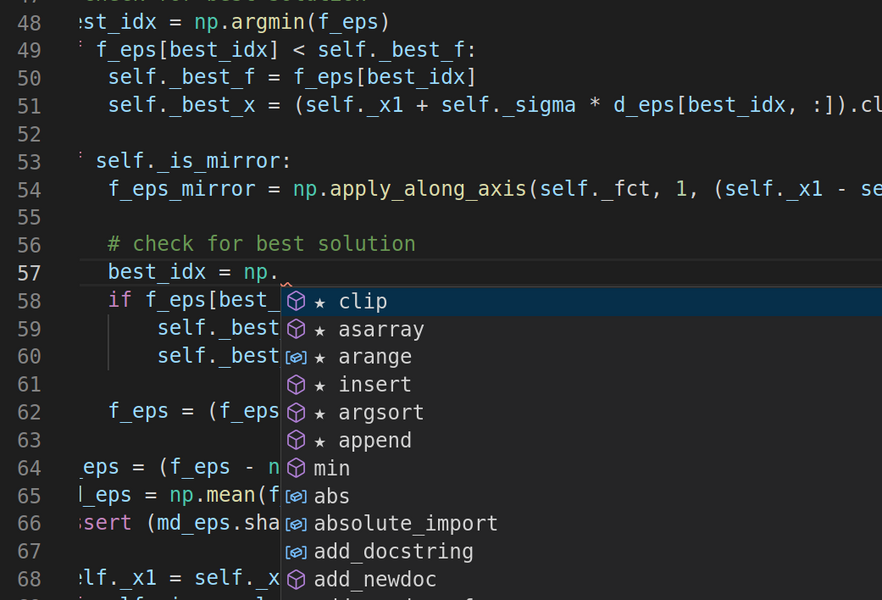

Development environments like Eclipse and Visual Studio now offer intelligent code suggestions powered by deep learning models. These models have been trained on vast repositories of example code, which enables them to read and write programs with remarkable proficiency. However, like other deep learning systems trained without security in mind, code-processing models have inherent vulnerabilities that malicious actors could exploit.

"Without proper safeguards, attackers can subtly manipulate inputs to these models, causing them to generate incorrect or harmful outputs," explains Shashank Srikant, a graduate student in MIT's Department of Electrical Engineering and Computer Science. "Our research focuses on identifying and mitigating these security risks."

In a new study, Srikant and colleagues at the MIT-IBM Watson AI Lab have developed an automated method for finding weaknesses in code-processing models and retraining the models to withstand attacks. The work is part of a broader effort by MIT researcher Una-May O'Reilly and IBM-affiliated researcher Sijia Liu to make automated programming tools both smarter and more secure. The team will present its findings at the upcoming International Conference on Learning Representations.

The concept of self-programming machines once belonged exclusively to the realm of science fiction. However, exponential growth in computing power, breakthroughs in natural language processing, and the abundance of open-source code repositories have transformed this vision into reality, enabling automation of various aspects of software development.

Trained on GitHub and other code-sharing websites, these models learn to generate programs much as language models learn to write articles or poetry. This lets them act as intelligent assistants that anticipate a developer's next move and offer suggestions. They can recommend programs suited to a specific task, generate documentation summaries, and even find and fix bugs. Despite their potential to boost productivity and improve software quality, these models pose security challenges that researchers are only beginning to understand.

Srikant and his team have found that code-processing models can be deceived by seemingly innocuous changes, such as renaming variables, inserting dummy print statements, or making other cosmetic edits to the code the model processes. The modified programs still run correctly, but they mislead the model into processing them incorrectly, which can produce flawed outputs.
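To make these cosmetic edits concrete, here is a minimal sketch (Python 3.9+, for `ast.unparse`) that applies two semantics-preserving transformations with the standard `ast` module: consistent variable renaming, and insertion of a `print(end='')` call that writes nothing. The helper names (`RenameVariables`, `insert_dummy_print`, `transform`) and the sample function are illustrative assumptions, not the researchers' code, and the renamer does no scope or name-clash checking.

```python
import ast

class RenameVariables(ast.NodeTransformer):
    """Consistently rename identifiers (no scope or clash checking in this sketch)."""

    def __init__(self, mapping):
        self.mapping = mapping

    def visit_Name(self, node):
        # Covers both reads and writes, e.g. `result += v` and `for v in ...`.
        node.id = self.mapping.get(node.id, node.id)
        return node

    def visit_arg(self, node):
        # Function parameters are `arg` nodes, not `Name` nodes.
        node.arg = self.mapping.get(node.arg, node.arg)
        return node


def insert_dummy_print(tree, func_name, position=0):
    """Insert `print(end='')`, which outputs nothing, into the named function's body."""
    for node in ast.walk(tree):
        if isinstance(node, ast.FunctionDef) and node.name == func_name:
            node.body.insert(position, ast.parse("print(end='')").body[0])
    return tree


def transform(source, mapping, func_name):
    tree = ast.parse(source)
    tree = RenameVariables(mapping).visit(tree)
    tree = insert_dummy_print(tree, func_name)
    return ast.unparse(ast.fix_missing_locations(tree))


original = """
def total(values):
    result = 0
    for v in values:
        result += v
    return result
"""
adversarial = transform(original, {"values": "xs", "result": "tmp", "v": "item"}, "total")
print(adversarial)
```

Both versions return the same value for the same input; only the tokens a model sees have changed. A model that leans on surface cues such as the name `result` can be thrown off even though the program's behavior is identical.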

Such errors can have serious consequences across many kinds of code-processing models. A malware-detection model might be tricked into classifying malicious software as safe. A code-completion tool might be steered into offering harmful or incorrect suggestions. In both cases, a threat could slip past the developer unnoticed. Computer vision models show a similar weakness: as earlier MIT research demonstrated, minor pixel changes can cause them to misclassify objects.

Code-processing models share a critical limitation with today's most advanced language models: they excel at recognizing statistical relationships among words and code elements but lack a genuine understanding of what those elements mean. OpenAI's GPT-3, for instance, can produce text that ranges from eloquent to nonsensical, and only a human reader can tell the difference.

Code-processing models exhibit the same fundamental constraint. "If these models truly understood the intrinsic properties of programs, they would be much harder to deceive," Srikant notes. "Currently, they remain surprisingly vulnerable to manipulation."

In their paper, the researchers propose a framework for automatically modifying programs to expose weaknesses in the models that process them. The framework solves a two-part optimization problem: an algorithm identifies the sites in a program where adding or replacing text causes the model to make the biggest mistakes, and it also determines which kinds of edits pose the greatest threat.
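The joint search over sites and edits can be illustrated with a deliberately small sketch. It is not the paper's optimization method: it swaps in a plain greedy search that, at each step, tries every (site, edit) pair and keeps the one that most lowers a toy model's confidence, for up to `budget` edits. The token-list program representation, the keyword-based `confidence` model, and all function and variable names are hypothetical.

```python
from itertools import product

# Hypothetical toy model (an assumption, not the paper's model): it judges
# "is this a summation routine?" purely from surface tokens.
SUM_CUES = {"total", "sum", "acc", "result", "+="}
DUMMY = ["print", "(", "end", "=", "''", ")"]  # a no-op statement

def confidence(program):
    tokens = [t for stmt in program for t in stmt]
    return sum(t in SUM_CUES for t in tokens) / len(tokens)

def apply_edit(program, edit):
    kind, site, payload = edit
    if kind == "rename":
        # Rename every occurrence of one local identifier: behavior is unchanged.
        return [[payload if t == site else t for t in stmt] for stmt in program]
    # kind == "insert": put a no-op statement before statement index `site`.
    return program[:site] + [payload] + program[site:]

def greedy_attack(program, local_names, fresh_names, budget):
    """Spend up to `budget` edits. Each round searches jointly over sites
    (which identifier / which position) and edits (rename target / insertion)."""
    current, chosen = program, []
    for _ in range(budget):
        present = {t for stmt in current for t in stmt}
        live = [n for n in local_names if n in present]
        unused = [n for n in fresh_names if n not in present]  # avoid name clashes
        candidates = [("rename", s, n) for s, n in product(live, unused)]
        # Insert only after the header (index 0); the toy ignores indentation.
        candidates += [("insert", i, DUMMY) for i in range(1, len(current))]
        best = min(candidates, key=lambda e: confidence(apply_edit(current, e)))
        if confidence(apply_edit(current, best)) >= confidence(current):
            break  # no remaining edit lowers the model's confidence
        current = apply_edit(current, best)
        chosen.append(best)
    return current, chosen

program = [
    ["def", "total", "(", "values", ")", ":"],
    ["result", "=", "0"],
    ["for", "v", "in", "values", ":"],
    ["result", "+=", "v"],
    ["return", "result"],
]
adversarial, edits = greedy_attack(program, ["values", "result", "v"], ["a", "b", "c"], budget=3)
print(round(confidence(program), 3), "->", round(confidence(adversarial), 3))
print(edits)
```

Raising `budget` loosely mirrors the one-edit versus five-edit comparison: because an edit is applied only when it lowers confidence, each extra edit can only push the toy model further from its original answer. Exhaustive enumeration is fine at this scale; real programs and models need a cheaper search.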

The framework revealed just how fragile some models are. A text summarization model the researchers tested failed a third of the time when a single edit was made to a program, and more than half the time when five edits were made. On the other hand, the researchers showed that models can learn from these mistakes and, in the process, potentially gain a deeper understanding of programming.
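Learning from such mistakes is commonly implemented as adversarial data augmentation. The sketch below assumes that approach and uses a toy bag-of-tokens perceptron as a stand-in for a real code model: it searches for renamed variants that the current model misclassifies, adds them to the training set with their original labels (renaming does not change what a program does), and retrains until no single-rename variant fools it. The model, the two-program dataset, and every helper name are illustrative assumptions; the study's actual retraining procedure is not reproduced here.

```python
from collections import Counter, defaultdict

def score(w, tokens):
    return sum(w[t] * c for t, c in Counter(tokens).items())

def train_perceptron(data, epochs=100):
    """Bag-of-tokens perceptron without bias; labels are +1 / -1."""
    w = defaultdict(float)
    for _ in range(epochs):
        for tokens, label in data:
            if label * score(w, tokens) <= 0:  # mistake: move weights toward the label
                for t, c in Counter(tokens).items():
                    w[t] += label * c
    return w

def predict(w, tokens):
    return 1 if score(w, tokens) > 0 else -1

def rename(tokens, old, new):
    # Renaming every occurrence of one identifier leaves behavior unchanged.
    return [new if t == old else t for t in tokens]

def rename_variants(tokens, local_names, fresh_names):
    """All single-rename variants, skipping renames that would clash with an existing name."""
    for old in local_names:
        for new in fresh_names:
            if old in tokens and new not in tokens:
                yield rename(tokens, old, new)

def adversarial_retrain(data, local_names, fresh_names, rounds=10):
    originals = list(data)
    w = train_perceptron(data)
    for _ in range(rounds):
        # Attack step: collect variants of the original programs that fool the model.
        found = [(v, label) for tokens, label in originals
                 for v in rename_variants(tokens, local_names, fresh_names)
                 if predict(w, v) != label]
        if not found:
            break
        # Retrain step: variants keep their original label, since behavior is unchanged.
        data = data + found
        w = train_perceptron(data)
    return w

sum_prog = ["def", "f", "(", "xs", ")", ":", "acc", "=", "0",
            "for", "x", "in", "xs", ":", "acc", "+=", "x", "return", "acc"]
max_prog = ["def", "f", "(", "xs", ")", ":", "best", "=", "xs[0]",
            "for", "x", "in", "xs", ":", "if", "x", ">", "best", ":",
            "best", "=", "x", "return", "best"]
data = [(sum_prog, 1), (max_prog, -1)]
local_names, fresh_names = ["acc", "best", "x"], ["tmp", "acc", "best"]

baseline = train_perceptron(data)
robust = adversarial_retrain(data, local_names, fresh_names)
print(predict(baseline, rename(sum_prog, "acc", "tmp")))  # baseline on a renamed sum
print(predict(robust, rename(sum_prog, "acc", "tmp")))    # retrained model, same input
```

In this toy, the baseline leans on variable names such as `acc` and `best`, so a single rename flips its answer. Once the renamed variants are in the training set, names no longer separate the classes, which pushes the perceptron toward structural tokens like `+=` and `if`: a small-scale analogue of attending to what a program does rather than what its variables are called.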

"Our framework for attacking models and retraining them on specific exploits could help code-processing models develop a better grasp of a program's intent," explains Liu, co-senior author of the study. "This represents an exciting avenue for future exploration."

Underlying these technical advances lies a more fundamental question: What exactly are these black-box deep learning models learning? "Do they reason about code in ways similar to humans, and if not, how can we bridge this gap?" asks O'Reilly. "This remains the grand challenge facing our field."