Throughout history, false narratives have been used to manipulate public sentiment, particularly in wartime, when propaganda turned populations against adversaries. Online platforms have turned this old tactic into something far more powerful by amplifying deceptive content. Disinformation spread through social media now threatens electoral integrity, fuels baseless conspiracy movements, and deepens societal division worldwide.

Steven Smith, of MIT Lincoln Laboratory's Artificial Intelligence Software Architectures and Algorithms Group, is part of the team behind the Reconnaissance of Influence Operations (RIO) initiative. The project set out to build a system that automatically detects false narratives and identifies the individuals spreading them across social networks. The team's research was published in the Proceedings of the National Academy of Sciences and received an R&D 100 award.

The project dates back to 2014, when Smith and his research team began investigating how social platforms could be maliciously exploited. Their analysis turned up suspicious patterns of online behavior: certain accounts showed unusual, apparently coordinated activity promoting Russia-friendly narratives.

"The data presented us with a perplexing challenge," Smith recalls. Recognizing the potential national security implications, the team secured backing from the laboratory's Technology Office to establish a comprehensive monitoring program focused on the 2017 French presidential election, where they anticipated similar disinformation tactics might emerge.

In the month before France's national election, the RIO team collected and analyzed 28 million tweets from 1 million distinct accounts. Their system processed this data in real time and identified deceptive accounts with 96% precision.
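A detection figure like this is derived from a confusion matrix, and which metric is reported matters when deceptive accounts are a small share of the total. The sketch below uses made-up counts (not RIO's data) to show how precision, recall, and accuracy are computed and why they can diverge. The function names and numbers are illustrative assumptions.

```python
def precision(tp, fp):
    """Share of flagged accounts that really are deceptive."""
    return tp / (tp + fp)

def recall(tp, fn):
    """Share of deceptive accounts that were flagged."""
    return tp / (tp + fn)

def accuracy(tp, fp, tn, fn):
    """Share of all accounts that were labeled correctly."""
    return (tp + tn) / (tp + fp + tn + fn)

# Hypothetical counts for 10,000 accounts, of which 126 are deceptive.
tp, fp, tn, fn = 96, 4, 9870, 30

p = precision(tp, fp)
r = recall(tp, fn)
a = accuracy(tp, fp, tn, fn)

print(f"precision={p:.4f} recall={r:.4f} accuracy={a:.4f}")
```

With these invented counts, accuracy is very high largely because most accounts are benign, while recall shows that a quarter of the deceptive accounts were missed. Precision answers a narrower question: when the system flags an account, how often is it right?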

What sets RIO apart is that it combines several analytic techniques to build a comprehensive picture of where and how disinformation narratives spread.

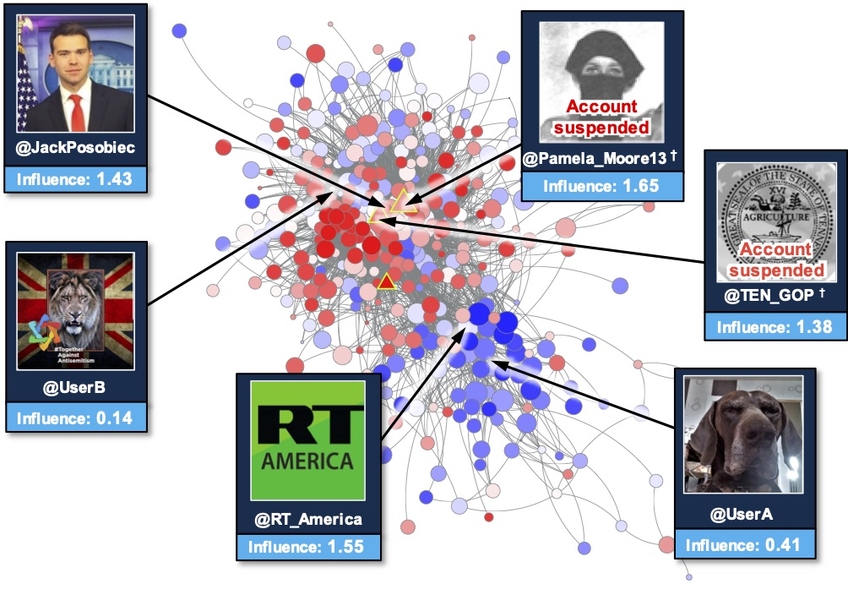

"Conventional influence assessment typically relies on superficial metrics like post frequency and sharing rates," explains Edward Kao, a key contributor to the research team. "However, our findings demonstrate that these traditional indicators fail to capture the true impact of accounts within network dynamics. Surface-level activity measurements simply don't reflect how narratives actually permeate and alter the information landscape."

During his doctoral research in the Lincoln Scholars fellowship program, Kao developed a statistical approach that is now part of RIO. It goes beyond identifying deceptive accounts: it quantifies each account's network-wide influence by measuring how the account reshapes information flows and amplifies specific narratives.
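To make the contrast between activity counts and network-level impact concrete, here is a minimal sketch on a toy reshare graph. It is not Kao's statistical framework; it swaps in a much simpler proxy (how many accounts a post can reach by following reshare edges) purely to show why the most active account is not necessarily the most influential one. The account names, graph, and `downstream_reach` helper are all hypothetical.

```python
from collections import deque

# Hypothetical toy data: each key is an account, each value lists the
# accounts that reshared its content.
reshares = {
    "a": ["b", "c"],
    "b": ["d"],
    "c": ["d", "e"],
    "spammer": [],
}
# Hypothetical activity counts (number of posts per account).
post_counts = {"a": 3, "b": 1, "c": 2, "d": 1, "e": 1, "spammer": 50}

def downstream_reach(graph, start):
    """Count distinct accounts reachable from `start` via reshare edges."""
    seen = {start}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for nxt in graph.get(node, []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return len(seen) - 1  # exclude the starting account itself

reach = {acct: downstream_reach(reshares, acct) for acct in post_counts}
most_active = max(post_counts, key=post_counts.get)
widest_reach = max(reach, key=reach.get)

print("most active:", most_active)
print("widest reach:", widest_reach)
```

In this toy graph the account that posts most often reaches no one, while a far less active account reaches four others. A real influence estimate would also have to account for what would have spread anyway, which reachability alone cannot capture.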

Complementing this capability, team member Erika Mackin applied a machine learning approach that improves RIO's classification of accounts. It examines behavioral indicators such as whether an account interacts with foreign media and which languages it uses, allowing the system to detect coordinated influence operations in varied contexts, from France's 2017 presidential race to misinformation about the COVID-19 pandemic.

Unlike conventional detection tools, which are limited to identifying automated bots, RIO evaluates both bot and human-operated accounts and measures their influence on the network. It also has a predictive function: analysts can model different intervention strategies and forecast how effectively each would disrupt a specific disinformation campaign before it gains traction.
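As a rough illustration of what modeling an intervention can mean, the sketch below compares how far a narrative spreads on a toy network with and without a given account removed. It is an assumption-laden simplification, not RIO's forecasting method: spread is treated as deterministic reachability, and the graph, account names, and `narrative_reach` helper are hypothetical.

```python
from collections import deque

def narrative_reach(graph, seeds, removed=frozenset()):
    """Return the set of accounts a narrative reaches from `seeds`,
    treating accounts in `removed` as taken out of the network."""
    active = [s for s in seeds if s not in removed]
    seen = set(active)
    queue = deque(active)
    while queue:
        node = queue.popleft()
        for nxt in graph.get(node, []):
            if nxt not in removed and nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return seen

# Hypothetical network: edges point from an account to those it passes content to.
graph = {
    "origin": ["hub", "minor"],
    "hub": ["u1", "u2", "u3"],
    "minor": ["u4"],
}
seeds = ["origin"]

baseline = len(narrative_reach(graph, seeds))

# Candidate interventions: remove one account and measure the drop in reach.
candidates = ["hub", "minor"]
impact = {
    c: baseline - len(narrative_reach(graph, seeds, removed={c}))
    for c in candidates
}
best = max(impact, key=impact.get)

print("baseline reach:", baseline)
print("reach reduction per intervention:", impact)
print("most effective removal:", best)
```

Comparing candidate removals against a shared baseline is the key design choice here: it ranks interventions by their estimated effect on the campaign as a whole, not by how active the targeted account is.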

The team expects RIO to be adopted by both government agencies and private sector organizations, with applications extending beyond social media to traditional news platforms, including print and broadcast media. The team is currently working with West Point cadet and MIT graduate student Joseph Schlessinger, a military fellow at Lincoln Laboratory, to analyze how narratives spread across European media outlets. A separate research initiative has also been launched to explore the psychological side of influence operations, examining how deceptive content shapes individual cognition and behavior.

"Our mission transcends conventional security concerns," Kao emphasizes. "Safeguarding truth in the digital age represents a fundamental defense of democratic values and societal trust."