AI-powered speech recognition has become increasingly common thanks to virtual assistants like Siri. However, these systems typically work well only for the most widely spoken of the world's roughly 7,000 languages.

This technological gap leaves millions of speakers of less common languages without access to numerous voice-dependent technologies, including smart home devices, assistive tools, and translation services that many take for granted.

Recent advances in machine learning have made it possible to build AI models that can learn uncommon languages, even though these languages lack the large amounts of transcribed speech normally needed to train speech recognition algorithms. However, these solutions are often too complex and expensive to be applied widely.

Researchers at MIT and other institutions have now tackled this problem with a technique that reduces the complexity of an advanced speech recognition model, making it both more efficient and more accurate.

Their method, called PARP (Prune, Adjust, and Re-Prune), removes unnecessary parts of a large, general-purpose speech recognition model and then makes small adjustments so the model can recognize a specific language. Because only small tweaks are needed once the larger model has been cut down to size, adapting it to an uncommon language is much cheaper and faster.

This work could help bring automatic speech recognition to regions where the technology is not yet available. Such systems are valuable in education, where they can support students with visual impairments; in healthcare, through medical transcription; and in the legal system, through court reporting. Speech recognition can also help people learn a new language and improve their pronunciation, and it could help preserve endangered languages by transcribing and documenting them.

"This represents a crucial advancement because while we possess remarkable technology in natural language processing and speech recognition, expanding research in this direction will enable us to scale these solutions to thousands of underexplored languages worldwide," explains Cheng-I Jeff Lai, a PhD student in MIT's Computer Science and Artificial Intelligence Laboratory (CSAIL) and lead author of the study.

Lai collaborated with fellow MIT PhD students Alexander H. Liu, Yi-Lun Liao, Sameer Khurana, and Yung-Sung Chuang; his advisor and senior author James Glass, senior research scientist and head of the Spoken Language Systems Group in CSAIL; MIT-IBM Watson AI Lab research scientists Yang Zhang, Shiyu Chang, and Kaizhi Qian; and David Cox, the IBM director of the MIT-IBM Watson AI Lab. The findings will be presented at the Conference on Neural Information Processing Systems in December.

Learning Speech Patterns from Audio Data

The researchers focused on wav2vec 2.0, a powerful neural network that has been pretrained to learn basic speech patterns from raw audio.

A neural network is a series of algorithms that learns to recognize patterns in data. Loosely modeled on the human brain, neural networks are organized into layers of interconnected nodes that process input data.

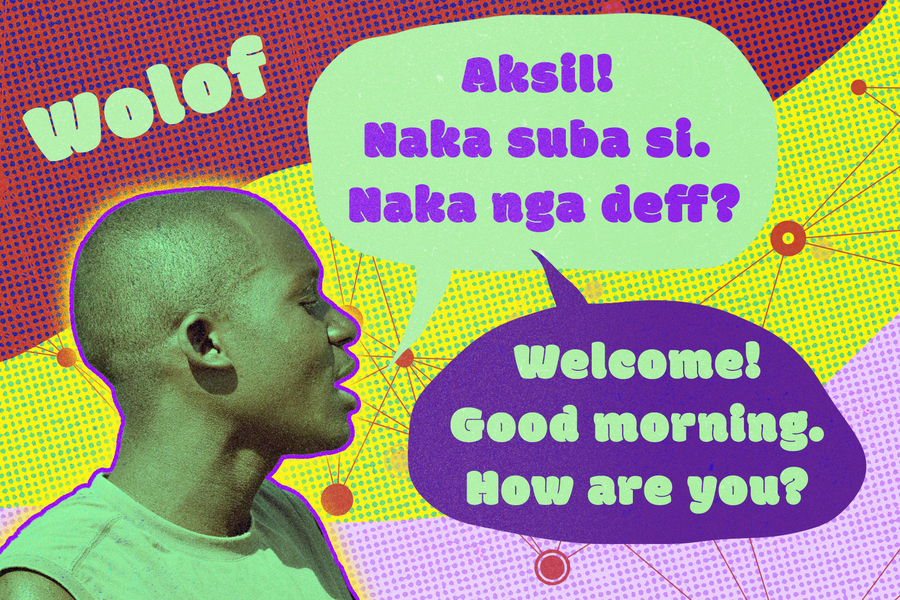
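To make "layers of interconnected nodes" concrete, here is a minimal Python sketch of a tiny two-layer network. It is a toy for illustration only, not the architecture of wav2vec 2.0; the function name `dense_layer` and all weight values are hypothetical. Each number in a weight matrix is one connection, and connections are what pruning later removes.

```python
def dense_layer(inputs, weights, biases):
    """One fully connected layer: every input node feeds every output node.

    weights[j][i] is the strength of the connection from input i to output j.
    """
    outputs = []
    for row, bias in zip(weights, biases):
        # Weighted sum over all incoming connections, plus a bias term
        total = sum(w * x for w, x in zip(row, inputs)) + bias
        # ReLU activation: the node passes on only positive signals
        outputs.append(max(0.0, total))
    return outputs

# Toy network: 3 input nodes -> 2 hidden nodes -> 1 output node
x = [1.0, 0.5, -1.0]
hidden = dense_layer(x, [[0.2, -0.4, 0.1], [0.5, 0.3, -0.2]], [0.0, 0.1])
output = dense_layer(hidden, [[1.0, 1.0]], [0.0])
```

This toy network has 8 connections (3 × 2 + 2 × 1); wav2vec 2.0 has roughly 300 million.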

The wav2vec 2.0 model is trained with self-supervised learning: it learns speech patterns by processing large amounts of unlabeled audio. Afterward, it can be finetuned for speech recognition with only a few minutes of transcribed speech. This opens the door to speech recognition for uncommon languages that have little transcribed data, such as Wolof, which is spoken by about 5 million people in West Africa.

However, with roughly 300 million individual connections, this neural network demands considerable computational resources for language-specific training.
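For a rough sense of scale, the back-of-the-envelope calculation below estimates how much memory 300 million weights occupy. It assumes each weight is stored as a 32-bit (4-byte) floating-point number and that pruning removes a hypothetical 50% of connections. It ignores the extra memory training needs for activations, gradients, and optimizer state.

```python
def weight_storage_gb(num_weights, bytes_per_weight=4):
    """Storage for dense weights in gigabytes (1 GB = 1e9 bytes)."""
    return num_weights * bytes_per_weight / 1e9

NUM_WEIGHTS = 300_000_000      # "roughly 300 million" connections
PRUNED_FRACTION = 0.5          # hypothetical sparsity level, for illustration

full_gb = weight_storage_gb(NUM_WEIGHTS)                            # about 1.2 GB
pruned_gb = weight_storage_gb(NUM_WEIGHTS * (1 - PRUNED_FRACTION))  # about 0.6 GB
```

In practice, a sparse model must also record which connections survive, so real savings are usually smaller than this naive estimate.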

The researchers set out to make the network more efficient through pruning. Much as a gardener cuts off superfluous branches, pruning removes connections that are not needed for a particular task, in this case learning a specific language. Lai and his team wanted to see how pruning would affect the model's speech recognition performance.
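A common way to decide which connections to cut is magnitude pruning: remove the weights with the smallest absolute values, on the assumption that they contribute least. The article does not detail how PARP chooses connections, so the sketch below assumes magnitude pruning; `magnitude_prune_mask` and `apply_mask` are hypothetical helper names, and the weights are made up.

```python
def magnitude_prune_mask(weights, sparsity):
    """Return a 0/1 mask that removes the `sparsity` fraction of smallest-magnitude weights.

    1 = keep the connection, 0 = prune it.
    """
    n_prune = int(len(weights) * sparsity)  # number of connections to cut (rounded down)
    # Indices ordered from smallest to largest absolute weight
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    mask = [1] * len(weights)
    for i in order[:n_prune]:
        mask[i] = 0
    return mask

def apply_mask(weights, mask):
    """Zero out every pruned connection."""
    return [w * m for w, m in zip(weights, mask)]

weights = [0.8, -0.05, 0.3, 0.01, -0.6, 0.2]
mask = magnitude_prune_mask(weights, sparsity=0.5)  # -> [1, 0, 1, 0, 1, 0]
pruned = apply_mask(weights, mask)
```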

After pruning the full neural network to create a smaller subnetwork, they trained the subnetwork on a small amount of labeled Spanish speech and then on French speech, a process called finetuning.

"We anticipated these models would differ significantly since they're finetuned for different languages. Surprisingly, when pruned, they demonstrate remarkably similar pruning patterns. For French and Spanish, we observed 97% overlap," Lai notes.

The team ran experiments on 10 languages, from Romance languages such as Italian and Spanish to languages with entirely different writing systems, such as Russian and Mandarin. In every case, the pruning patterns of the finetuned models overlapped substantially.
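One plausible way to quantify how much two pruning patterns overlap is the intersection over union (IoU) of the connections each mask keeps. The article does not say exactly how the 97% figure was computed, so treat this metric, the helper `mask_overlap`, and the tiny masks below as illustrative assumptions, not the researchers' data.

```python
from itertools import combinations

def mask_overlap(mask_a, mask_b):
    """Intersection over union of the connections kept by two 0/1 pruning masks."""
    kept_a = {i for i, m in enumerate(mask_a) if m}
    kept_b = {i for i, m in enumerate(mask_b) if m}
    union = kept_a | kept_b
    return len(kept_a & kept_b) / len(union) if union else 1.0

# Hypothetical masks for three languages (1 = connection kept)
masks = {
    "es": [1, 1, 0, 1, 0, 1, 1, 0],
    "fr": [1, 1, 0, 1, 0, 1, 0, 0],
    "ru": [1, 0, 0, 1, 1, 1, 1, 0],
}

# Overlap for every pair of languages
pairwise = {
    (a, b): mask_overlap(masks[a], masks[b])
    for a, b in combinations(sorted(masks), 2)
}
```

With these made-up masks, the Spanish/French pair overlaps most (0.8), mirroring the kind of comparison the researchers describe across their 10 languages.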

An Innovative Solution

Building on this finding, the researchers developed PARP (Prune, Adjust, and Re-Prune), a simple technique for improving both the efficiency and the performance of neural networks.

In the first step, a pretrained speech recognition network such as wav2vec 2.0 is pruned by removing unnecessary connections. In the second step, the resulting subnetwork is adjusted (finetuned) for a specific language and then pruned again. During this second step, connections that were removed can be restored if they turn out to be important for that language.

Because connections can be restored during the second step, the model needs to be finetuned only once rather than over multiple rounds, which greatly reduces the computing power required.
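The steps above can be sketched on a toy problem. This is a minimal illustration under stated assumptions, not the researchers' implementation: the weights are a flat list, a quadratic loss pulling toward a hypothetical `target` vector stands in for finetuning on labeled speech, magnitude pruning is assumed as the criterion, and re-pruning happens once at the end rather than on whatever schedule the real method uses. What it shows is that pruned weights are zeroed but not frozen, so a connection removed in the first step can come back.

```python
def magnitude_mask(weights, sparsity):
    """0/1 mask keeping the largest-magnitude weights (1 = keep)."""
    n_prune = int(len(weights) * sparsity)
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    mask = [1] * len(weights)
    for i in order[:n_prune]:
        mask[i] = 0
    return mask

def parp(pretrained, target, sparsity=0.5, steps=50, lr=0.1):
    """Toy Prune, Adjust, Re-Prune on a flat list of weights.

    `target` (hypothetical) stands in for the weights a language-specific task
    favors; the loss is 0.5 * sum((w - t)^2), so its gradient is (w - t).
    """
    # 1. Prune: drop the smallest-magnitude pretrained weights.
    mask = magnitude_mask(pretrained, sparsity)
    weights = [w * m for w, m in zip(pretrained, mask)]

    # 2. Adjust: a single finetuning run that updates ALL weights, including
    #    the zeroed ones, so a pruned connection can grow back if needed.
    for _ in range(steps):
        weights = [w - lr * (w - t) for w, t in zip(weights, target)]

    # 3. Re-prune: recompute the mask at the same sparsity and apply it.
    new_mask = magnitude_mask(weights, sparsity)
    final = [w * m for w, m in zip(weights, new_mask)]
    return mask, new_mask, final

pretrained = [0.9, 0.05, -0.7, 0.02]
target = [0.8, 0.6, -0.1, 0.0]  # hypothetical: the task needs connection 1, not 2
first_mask, second_mask, final = parp(pretrained, target)
# first_mask == [1, 0, 1, 0]; second_mask == [1, 1, 0, 0]
```

Here connection 1, pruned at first because its pretrained weight was small, grows during adjustment and survives re-pruning, while connection 2 shrinks and is removed. All of this happens within one finetuning run, which is how the approach avoids repeated prune-and-finetune cycles.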

Evaluating the PARP Technique

The researchers compared PARP with other common pruning techniques and found that it outperformed them for speech recognition. It was especially effective when only a very small amount of transcribed speech was available for training.

They also showed that PARP can produce a single small subnetwork that can be finetuned for 10 languages at once, eliminating the need to prune a separate subnetwork for each language and further reducing training time and cost.

Looking ahead, the team aims to apply PARP to text-to-speech models and explore how their technique might enhance efficiency across other deep learning networks.

"There's growing demand for implementing large deep-learning models on edge devices. More efficient models enable deployment on less sophisticated systems like mobile phones. Speech technology remains crucial for mobile applications, but smaller models don't necessarily compute faster. We need additional innovations to accelerate computation, indicating substantial research opportunities ahead," Zhang explains.

Self-supervised learning (SSL) is transforming speech processing, making SSL model compression without performance degradation a critical research direction, notes Hung-yi Lee, associate professor in the Department of Electrical Engineering and the Department of Computer Science and Information Engineering at National Taiwan University, who wasn't involved in this research.

"PARP trims SSL models while surprisingly improving recognition accuracy. Moreover, the study reveals a subnet within the SSL model suitable for ASR tasks across multiple languages. This discovery will stimulate research on language/task-agnostic network pruning. Essentially, SSL models can be compressed while maintaining performance across various tasks and languages," he observes.

This research received partial funding from the MIT-IBM Watson AI Lab and the 5k Language Learning Project.