Machine learning has emerged as a transformative technology, empowering researchers to uncover patterns, predict behaviors, and optimize tasks across numerous applications. From sophisticated vision systems in autonomous vehicles and social robots to intelligent thermostats and health-monitoring wearables like smartwatches, these algorithms are reshaping our interaction with technology. Despite their growing power and efficiency, traditional machine learning approaches typically demand substantial memory resources, computational power, and vast amounts of data for both training and inference processes.

Concurrently, the tech industry is pushing toward miniaturization, striving to run these complex algorithms on ever smaller devices, down to the microcontroller units (MCUs) that power billions of internet-of-things (IoT) devices worldwide. An MCU is a memory-constrained minicomputer housed in a compact integrated circuit; it has no operating system and executes simple commands. These inexpensive edge devices require little power, computing capacity, and bandwidth, and they offer many opportunities to integrate AI technology to extend their usefulness, improve privacy, and broaden access. This emerging field is known as TinyML.

Recently, an MIT research team working within the MIT-IBM Watson AI Lab and led by Song Han, an assistant professor in the Department of Electrical Engineering and Computer Science (EECS), designed a technique that shrinks the memory these models need while improving their performance on image recognition in live video.

"Our innovative approach unlocks unprecedented capabilities and charts a new course for implementing tiny machine learning directly on edge devices," explains Han, who specializes in designing both TinyML software and hardware solutions.

To improve TinyML efficiency, Han and his colleagues from EECS and the MIT-IBM Watson AI Lab analyzed how memory is used on microcontrollers running various convolutional neural networks (CNNs). CNNs are biologically inspired models, loosely patterned on neurons in the brain, and are often used to evaluate and identify visual features in imagery, such as a person moving through a video frame. The analysis revealed an imbalance in memory use: consumption is concentrated in the early part of the network, front-loading the chip and creating a bottleneck. By developing a new inference technique and redesigning the neural architecture, the team alleviated the problem and reduced peak memory usage by a factor of four to eight. The researchers then deployed the technique on their own tinyML vision system, which is equipped with a camera and capable of human and object detection, creating its next generation, dubbed MCUNetV2. Compared with other machine learning methods running on microcontrollers, MCUNetV2 achieved higher detection accuracy, opening the door to vision applications that were not previously possible.

The results will be presented in a paper at the Neural Information Processing Systems (NeurIPS) conference this week. The team includes Han, lead author and graduate student Ji Lin, postdoc Wei-Ming Chen, graduate student Han Cai, and MIT-IBM Watson AI Lab Research Scientist Chuang Gan.

Pioneering Memory Efficiency and Redistribution Architecture

TinyML offers numerous advantages over deep machine learning that runs on larger devices such as remote servers and smartphones. According to Han, these include privacy, since data are processed locally on the device rather than transmitted to cloud servers; robustness, since computation is fast and latency is low; and low cost, with IoT devices typically priced between $1 and $2. In addition, some larger, more traditional AI models can emit as much carbon as five automobiles over their lifetimes, require many GPUs, and cost billions of dollars to train. "We believe these TinyML techniques enable us to operate off-grid, substantially reducing carbon emissions while making AI greener, smarter, faster, and more accessible to everyone, truly democratizing artificial intelligence," Han says.

Nevertheless, the limited memory and digital storage of small MCUs continue to constrain AI applications, making efficiency a central challenge. MCUs typically contain only 256 kilobytes of memory and 1 megabyte of storage. By comparison, mobile AI on smartphones and cloud computing platforms may offer 256 gigabytes and terabytes of storage, respectively, along with 16,000 to 100,000 times more memory. Treating memory as a precious resource, the team set out to optimize its use by profiling the MCU memory consumption of various CNN designs, a task that had previously been overlooked, according to Lin and Chen.
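The kind of profiling described here can be sketched in a few lines of Python. This is a minimal illustration, not the team's tooling: the block shapes below are hypothetical (they are not the MCUNet architecture), activations are assumed to be 8-bit, and each block is assumed to hold only its input and output feature maps in memory at once.

```python
# Hypothetical block outputs as (height/width, channels). Illustrative only;
# these are NOT the shapes of MCUNet or any published network.
BLOCKS = [
    (112, 16), (56, 24), (56, 24), (28, 40), (28, 40),
    (14, 80), (14, 80), (14, 96), (7, 160), (7, 320),
]
INPUT_SHAPE = (224, 3)    # assumed 224x224 RGB input
BYTES_PER_VALUE = 1       # assumed int8 activations
SRAM_BUDGET = 256 * 1024  # the 256-kilobyte MCU memory figure, in bytes


def activation_bytes(size, channels):
    """Bytes needed to hold one square feature map."""
    return size * size * channels * BYTES_PER_VALUE


def profile(blocks, input_shape):
    """Per-block memory: input feature map plus output feature map."""
    usage = []
    prev = input_shape
    for shape in blocks:
        usage.append(activation_bytes(*prev) + activation_bytes(*shape))
        prev = shape
    return usage


usage = profile(BLOCKS, INPUT_SHAPE)
for index, nbytes in enumerate(usage, start=1):
    status = "over budget" if nbytes > SRAM_BUDGET else "ok"
    print(f"block {index:2d}: {nbytes / 1024:7.1f} KB  {status}")
```

Even in this toy network, the early high-resolution blocks dominate memory use while the later blocks fit comfortably, which is the shape of imbalance the article describes.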

Their analysis revealed that memory usage peaked within the first five convolutional blocks out of approximately seventeen. Each block contains many connected convolutional layers that help filter for specific features in an input image or video and produce a feature map as output. During this memory-intensive initial stage, most blocks exceeded the 256KB memory limit, leaving substantial room for improvement. To reduce peak memory, the researchers developed a patch-based inference schedule that operates on only a small fraction of a layer's feature map at a time (roughly 25 percent), then moves through each remaining quarter until the whole layer is done. This approach used four to eight times less memory than the conventional layer-by-layer method, without any added latency.
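The idea of patch-based inference can be illustrated with a small, self-contained Python sketch. It is a toy under stated assumptions, not the MCUNetV2 implementation: the "network" is two single-channel 3x3 convolutions without padding, the kernels and input are made up, and the memory count covers only the input and output buffers a layer holds while it runs (the stored input image and the assembled output are excluded for simplicity).

```python
def conv3x3(x, k):
    """Valid 3x3 cross-correlation over a 2D list of numbers."""
    h, w = len(x), len(x[0])
    return [
        [sum(x[i + di][j + dj] * k[di][dj] for di in range(3) for dj in range(3))
         for j in range(w - 2)]
        for i in range(h - 2)
    ]


def run_layers(x, kernels):
    """Run the layers in order; return the output and the peak number of
    values held at once (a layer's input buffer plus its output buffer)."""
    peak = 0
    for k in kernels:
        y = conv3x3(x, k)
        peak = max(peak, len(x) * len(x[0]) + len(y) * len(y[0]))
        x = y
    return x, peak


def run_patched(x, kernels, grid=2):
    """Compute the same output one patch at a time (grid x grid patches)."""
    halo = 2 * len(kernels)  # each valid 3x3 layer trims 2 rows and 2 columns
    out_h, out_w = len(x) - halo, len(x[0]) - halo
    ph, pw = out_h // grid, out_w // grid  # assumes an evenly divisible output
    out = [[0] * out_w for _ in range(out_h)]
    peak = 0
    for gi in range(grid):
        for gj in range(grid):
            r0, c0 = gi * ph, gj * pw
            # Crop the input region that this output patch depends on.
            crop = [row[c0:c0 + pw + halo] for row in x[r0:r0 + ph + halo]]
            y, p = run_layers(crop, kernels)
            peak = max(peak, p)
            for i in range(ph):
                out[r0 + i][c0:c0 + pw] = y[i]
    return out, peak


# Made-up 20x20 integer image and two made-up kernels.
image = [[(7 * i + 3 * j) % 11 for j in range(20)] for i in range(20)]
kernels = [
    [[1, 0, -1], [2, 0, -2], [1, 0, -1]],
    [[0, 1, 0], [1, -4, 1], [0, 1, 0]],
]
full_out, full_peak = run_layers(image, kernels)
patch_out, patch_peak = run_patched(image, kernels, grid=2)
print(full_out == patch_out, full_peak, patch_peak)
```

With four patches the two schedules produce identical outputs, while the peak working set in this toy drops from 724 values to 244. The saving is smaller than a clean factor of four because each patch must also read a border of neighboring pixels.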

"To illustrate with an analogy, imagine we have a pizza. We can divide it into four slices and consume only one slice at a time, effectively conserving about three-quarters of the meal. This represents the essence of our patch-based inference method," Han explains. "However, this approach wasn't without its trade-offs." Like photoreceptors in the human eye, the network can take in and examine only part of an image at a time; this receptive field is a patch of the total image or field of view. As the receptive fields (or pizza slices, in the analogy) grow, they overlap more and more, producing redundant computation that the researchers measured at about 10 percent. To address this, the team proposed redistributing the neural network across the blocks, in parallel with the patch-based inference method, without losing any of the vision system's accuracy. That left the question of which blocks needed patch-based inference and which could use the original layer-by-layer approach, along with the redistribution decisions themselves. Tuning all of these choices by hand was labor-intensive, so the researchers developed an automated solution.
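The overlap cost can be made concrete with a rough count of how many output positions each layer must compute. The sketch below is a simplified model, not the researchers' measurement: it assumes a stack of unpadded 3x3, stride-1 convolutions in which every layer costs the same per output position, and the sizes are arbitrary. It is not expected to reproduce the 10 percent figure.

```python
def positions_full(out_size, n_layers):
    """Output positions computed per layer when whole feature maps are used.
    Layer l (1-based) must produce a map that later layers shrink to out_size."""
    return [(out_size + 2 * (n_layers - l)) ** 2 for l in range(1, n_layers + 1)]


def positions_patched(out_size, n_layers, grid):
    """Same count when the final map is split into grid x grid patches.
    Each patch recomputes its own border (halo) at every earlier layer."""
    patch = out_size // grid  # assumes out_size is divisible by grid
    return [
        grid * grid * (patch + 2 * (n_layers - l)) ** 2
        for l in range(1, n_layers + 1)
    ]


def overhead(out_size, n_layers, grid):
    """Fraction of extra computation caused by overlapping receptive fields."""
    full = sum(positions_full(out_size, n_layers))
    patched = sum(positions_patched(out_size, n_layers, grid))
    return patched / full - 1.0


for layers in (1, 2, 4):
    print(layers, "layers:", f"{overhead(16, layers, grid=2):.1%}", "extra work")
```

In this model a single layer adds no redundant work, and the overhead grows as more layers are run per patch, because the receptive field of each patch widens with depth. That growth is why the choice of how many blocks to run patch by patch matters.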

"We aimed to automate this process through a joint automated search for optimization, encompassing both neural network architecture elements—such as the number of layers, channels, and kernel sizes—and inference scheduling parameters including patch numbers, layers designated for patch-based inference, and other optimization variables," Lin explains. "This approach enables non-machine learning experts to implement a push-button solution that enhances computational efficiency while improving engineering productivity, facilitating neural network deployment on microcontrollers."
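A joint search of this kind can be illustrated with a deliberately simple sketch. Everything in it is an assumption made for illustration: the search is plain brute-force enumeration rather than the team's search method, the memory and compute formulas are toy models, and the product of resolution and channel count stands in for accuracy. The names `peak_memory`, `relative_compute`, and `search` are hypothetical.

```python
from itertools import product

SRAM_BUDGET = 256 * 1024  # bytes; the MCU memory figure quoted in the article
NUM_BLOCKS = 5            # hypothetical number of blocks in the toy network


def peak_memory(res, ch, grid, depth):
    """Toy model: block i holds res*res*ch / 2**i bytes of int8 activations;
    the first `depth` blocks run per patch, so they hold 1/grid**2 of that."""
    sizes = []
    for i in range(NUM_BLOCKS):
        size = res * res * ch // 2 ** i
        if i < depth:
            size //= grid * grid
        sizes.append(size)
    return max(sizes)


def relative_compute(grid, depth):
    """Toy model: overlap overhead grows with patch count and patch-stage depth."""
    return 1.0 + 0.03 * depth * (grid - 1)


def search(allow_patching=True, max_compute=1.15):
    """Enumerate architecture and schedule choices jointly; return the best
    (res, ch, grid, depth) that fits the memory and compute limits."""
    schedules = [(1, 0)]  # (grid, depth): plain layer-by-layer inference
    if allow_patching:
        schedules += [(g, d) for g in (2, 3) for d in (1, 2, 3)]
    best = None
    for res, ch, (grid, depth) in product((96, 128, 160), (16, 24, 32), schedules):
        if peak_memory(res, ch, grid, depth) > SRAM_BUDGET:
            continue
        compute = relative_compute(grid, depth)
        if compute > max_compute:
            continue
        # Rank by proxy score (res * ch), then by lower compute overhead.
        candidate = (res * ch, -compute, res, ch, grid, depth)
        if best is None or candidate > best:
            best = candidate
    return best[2:] if best else None


print("layer-by-layer only:", search(allow_patching=False))
print("joint search:       ", search(allow_patching=True))
```

Under these toy models, searching over the schedule together with the architecture lets a larger, wider network fit in the same 256 kilobytes than layer-by-layer inference allows. That is the motivation for searching both sets of choices at once rather than fixing one and tuning the other.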

Expanding Horizons for Compact Vision Systems

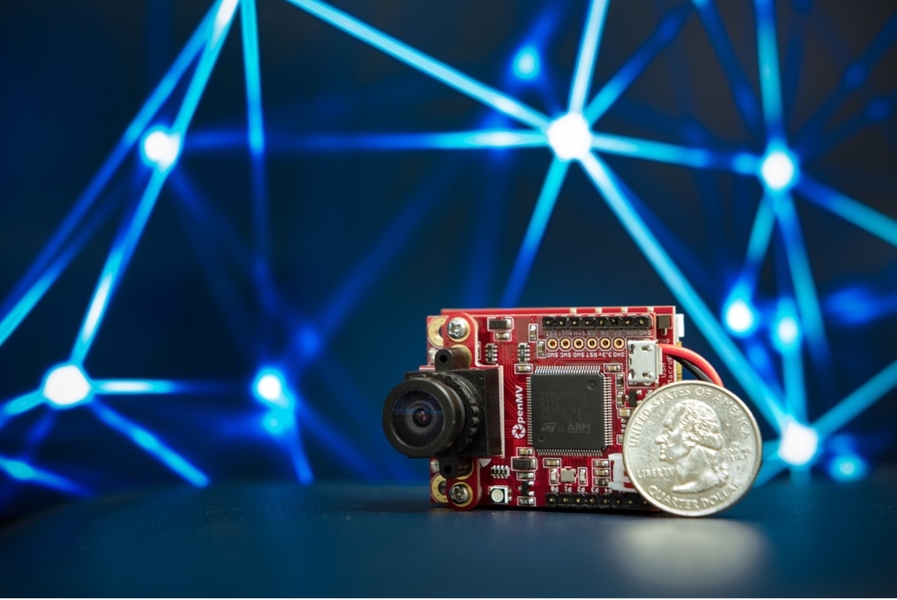

Co-designing the network architecture with the neural network search optimization and the inference schedule yielded substantial gains and was adopted into MCUNetV2. The system outperformed other vision systems in peak memory usage and in image and object detection and classification. The MCUNetV2 device is about the size of an earbud case and includes a small screen and a camera. Compared with the first version, the new version needs four times less memory to reach the same accuracy, according to Chen. Against other tinyML solutions, MCUNetV2 showed an improvement of nearly 17 percent in detecting objects within image frames, such as human faces. It also set an accuracy record of nearly 72 percent for thousand-class image classification on the ImageNet dataset while using only 465KB of memory. The researchers also tested "visual wake words," which measure how well their MCU vision model can identify the presence of a person in an image. Even with only 30KB of memory, the system achieved greater than 90 percent accuracy, beating the previous state-of-the-art method. This level of accuracy indicates that the technique is ready for deployment in practical applications such as smart-home systems.

With its combination of high accuracy, low energy use, and low cost, MCUNetV2 opens up new IoT applications. Han notes that vision systems on IoT devices were previously thought suitable only for basic image classification because of their limited memory, and that the team's work has expanded what TinyML can be used for. The researchers envision applications across diverse fields, including health care, such as sleep monitoring and joint movement tracking; sports coaching, such as analyzing a golf swing; agriculture, such as plant identification; and manufacturing, from identifying nuts and bolts to detecting malfunctioning machinery.

"We are genuinely pushing the boundaries toward larger-scale, real-world applications," Han states. "Without requiring GPUs or specialized hardware, our technique operates with such minimal resource requirements that it can run on these small, affordable IoT devices while performing practical applications like visual wake word detection, face mask identification, and person detection. This breakthrough opens the door to an entirely new paradigm for implementing tiny AI and mobile vision solutions."

This research received sponsorship from the MIT-IBM Watson AI Lab, Samsung, Woodside Energy, and the National Science Foundation.