Albert Einstein famously held that intuition is the only truly valuable thing, and intuition is arguably one of the most important keys to understanding intention and communication.

However, intuition is difficult to teach, especially to a machine. To move closer to seamless human-robot collaboration, researchers at MIT's Computer Science and Artificial Intelligence Laboratory (CSAIL) have developed a system dubbed "Conduct-A-Bot" that uses muscle signals captured by wearable sensors to pilot a robot's movements.

"We envision a future where machines assist humans with both cognitive and physical tasks, adapting to human needs rather than forcing humans to adapt to them," explains Professor Daniela Rus, CSAIL director and co-author of the research paper.

To enable natural teamwork between humans and machines, the system uses electromyography (EMG) and motion sensors worn on the biceps, triceps, and forearms. The sensors measure muscle signals and movement, and algorithms process the data to detect gestures in real time. The system requires no offline calibration or user-specific training data; it uses only two or three wearable sensors and nothing in the environment.
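The article does not describe how the raw signals are processed, but a common first step for EMG is to turn the noisy, oscillating signal into a smooth amplitude envelope. The sketch below computes a streaming root-mean-square (RMS) envelope over a sliding window; the class name `RmsEnvelope` and the window length are hypothetical choices for illustration, not details of Conduct-A-Bot.

```python
import math
from collections import deque


class RmsEnvelope:
    """Streaming RMS envelope of a raw EMG channel.

    Hypothetical helper for illustration; the window length (in samples)
    is an assumed parameter, not one reported for Conduct-A-Bot.
    """

    def __init__(self, window: int = 50):
        self.buf = deque(maxlen=window)
        self.sum_sq = 0.0  # running sum of squares over the current window

    def update(self, sample: float) -> float:
        # When the window is full, appending evicts the oldest sample,
        # so remove its contribution from the running sum first.
        if len(self.buf) == self.buf.maxlen:
            oldest = self.buf[0]
            self.sum_sq -= oldest * oldest
        self.buf.append(sample)
        self.sum_sq += sample * sample
        # max() guards against tiny negative values from rounding drift.
        return math.sqrt(max(self.sum_sq, 0.0) / len(self.buf))


# Usage: a zero-mean oscillation gives a steady envelope; a burst raises it.
env = RmsEnvelope(window=4)
envelope = [env.update(x) for x in [1.0, -1.0, 1.0, -1.0, 3.0]]
```

Because each update takes constant time, a filter like this can keep pace with a live sensor stream, which real-time gesture detection requires.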

This research received partial funding from the Boeing Company.

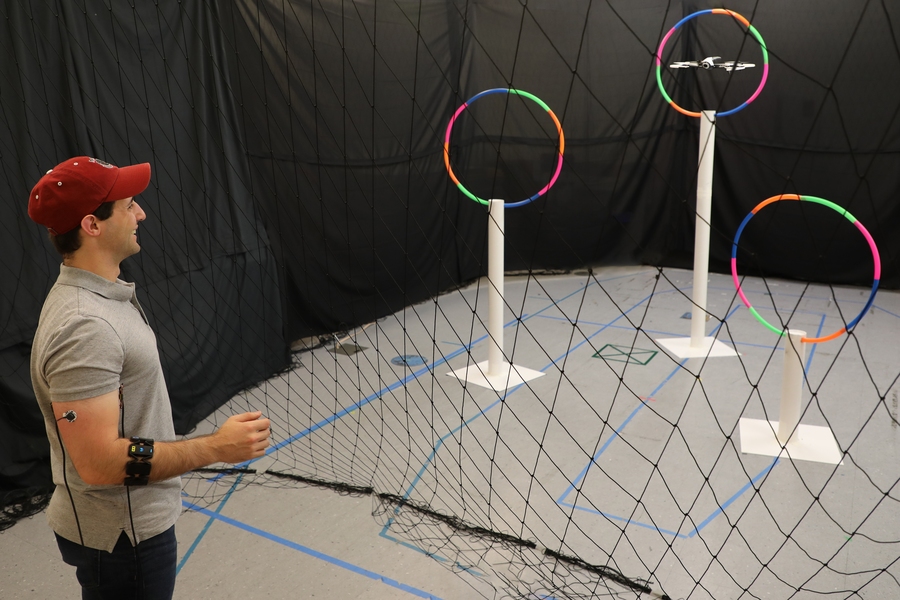

Although this kind of human-robot interface could be used in many scenarios, from navigating menus on electronic devices to supervising autonomous robots, the team demonstrated it with a Parrot Bebop 2 drone; any commercial drone could be used instead.

By detecting gestures such as rotations, clenched fists, tensed arms, and activated forearms, Conduct-A-Bot can move the drone left, right, up, down, and forward, as well as make it rotate and stop.
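One way to picture the final step is a lookup table from recognized gestures to drone motions. The gesture labels, the `Command` enum, and the specific pairings below are hypothetical; the article lists the gesture types and drone motions but not an exact mapping.

```python
from enum import Enum
from typing import Optional


class Command(Enum):
    LEFT = "left"
    RIGHT = "right"
    UP = "up"
    DOWN = "down"
    FORWARD = "forward"
    ROTATE_CW = "rotate_cw"
    ROTATE_CCW = "rotate_ccw"
    STOP = "stop"


# Hypothetical gesture labels and pairings, chosen for illustration only.
GESTURE_TO_COMMAND = {
    "wave_left": Command.LEFT,
    "wave_right": Command.RIGHT,
    "wrist_up": Command.UP,
    "wrist_down": Command.DOWN,
    "fist_clench": Command.FORWARD,
    "rotate_cw": Command.ROTATE_CW,
    "rotate_ccw": Command.ROTATE_CCW,
    "arm_stiffen": Command.STOP,
}


def to_command(gesture: Optional[str]) -> Command:
    """Map a detected gesture label to a drone command.

    Unknown labels, or None when no gesture was detected, fall back to STOP.
    """
    return GESTURE_TO_COMMAND.get(gesture, Command.STOP)
```

Falling back to `STOP` for any unrecognized label is a conservative design choice assumed here: a missed or spurious classification leaves the drone stopped rather than sending it in an unintended direction.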

Just as a friend would understand that a gesture to the right means they should move that way, the drone follows hand movements: if you wave your hand to the left, for example, it turns left.

In tests, the drone correctly responded to 82% of over 1,500 human gestures when it was remotely controlled to fly through hoops. The system also correctly identified approximately 94% of cued gestures when the drone was not being controlled.

"Understanding human gestures enables robots to interpret the nonverbal cues we naturally use in daily interactions," notes Joseph DelPreto, lead author of the paper. "This type of system could make human-robot interaction feel more like person-to-person communication, lowering the barrier to entry for inexperienced users without requiring external sensors."

Applications for muscle-signal robot control could include remote exploration, assistive personal robots, and manufacturing tasks such as delivering objects or handling materials.

Such tools are also compatible with social distancing and could open up new kinds of contactless work. For example, people could direct machines to clean hospital rooms or deliver medications while staying a safe distance away.

Muscle signals often reveal information that is difficult to obtain from visual observation alone, such as joint stiffness or fatigue.

For example, watching someone hold a heavy box reveals little about how much effort it takes, and machines that rely solely on vision share this limitation. Muscle sensors make it possible to estimate not just motion but also the force and torque needed to execute a physical trajectory.
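A short worked example shows why posture alone says little about effort. In a simplified single-joint model (an assumption for illustration: the load acts a distance `d` from the elbow, the forearm makes angle `theta` with the horizontal, and the forearm's own weight is ignored), the static elbow torque is `tau = m * g * d * cos(theta)`. The hypothetical helper below evaluates it for two boxes held in the same pose.

```python
import math

G = 9.81  # standard gravity in m/s^2, rounded


def holding_torque(load_kg: float, lever_m: float, angle_rad: float = 0.0) -> float:
    """Static elbow torque in N*m needed to hold a load.

    Simplified single-joint model assumed for illustration: the load acts
    at lever_m from the elbow, the forearm makes angle_rad with the
    horizontal, and the forearm's own mass is ignored.
    """
    return load_kg * G * lever_m * math.cos(angle_rad)


# Same posture (forearm horizontal, 0.35 m lever arm), two different boxes.
light_box = holding_torque(1.0, 0.35)   # about 3.4 N*m
heavy_box = holding_torque(10.0, 0.35)  # about 34.3 N*m
```

The heavier box needs ten times the torque even though a camera would see identical arm poses; muscle activity, by contrast, generally rises with the effort required.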

The system's gesture vocabulary includes the rotations, fist clenches, arm tensing, and forearm activation described above.

Machine learning classifiers process the wearable-sensor data: unsupervised classifiers cluster muscle and motion data in real time to distinguish gestures from other movements, and a neural network predicts wrist flexion or extension from forearm muscle signals.
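The article does not specify which clustering algorithm Conduct-A-Bot uses. As a stand-in, the sketch below applies sequential (online) k-means with two clusters to a scalar feature such as an EMG envelope, splitting samples into "rest" and "active" groups without any labeled training data. The class name `OnlineTwoMeans` and the initial centers are assumptions, and the neural network for wrist flexion and extension is not shown.

```python
class OnlineTwoMeans:
    """Sequential k-means with k=2 on a scalar feature (illustrative stand-in).

    Cluster 0 is "rest" and cluster 1 is "active". Each initial center
    counts as one pseudo-observation, so every center is the running mean
    of its initial value and the samples assigned to it.
    """

    def __init__(self, rest: float, active: float):
        self.centers = [rest, active]
        self.counts = [1, 1]

    def update(self, x: float) -> int:
        # Assign x to the nearest center; ties go to "rest".
        k = 0 if abs(x - self.centers[0]) <= abs(x - self.centers[1]) else 1
        # Move only that center toward x by 1/n (MacQueen-style update).
        self.counts[k] += 1
        self.centers[k] += (x - self.centers[k]) / self.counts[k]
        return k


# Usage on a few envelope values: low values are rest, high values are gestures.
clusterer = OnlineTwoMeans(rest=0.0, active=1.0)
labels = [clusterer.update(x) for x in [0.1, 0.05, 0.9, 1.2, 0.08, 1.1]]
```

Each new sample updates only the center it is assigned to, so the boundary between the clusters drifts toward the wearer's actual rest and activity levels as data arrives.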

The system self-calibrates to each user's signals while they perform gestures, making it easier for casual users to interact with robots.
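One simple way to illustrate self-calibration, assumed here rather than taken from the paper, is to normalize each user's signal by its own running mean and standard deviation so that a single fixed threshold works for users with different raw signal amplitudes. The hypothetical `RunningNormalizer` below uses Welford's online algorithm, which updates these statistics one sample at a time without storing past data.

```python
import math


class RunningNormalizer:
    """Per-user running z-score using Welford's online algorithm (illustrative)."""

    def __init__(self):
        self.n = 0
        self.mean = 0.0
        self.m2 = 0.0  # sum of squared deviations from the running mean

    def update(self, x: float) -> float:
        # Welford update of the running mean and squared-deviation sum.
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self.m2 += delta * (x - self.mean)
        if self.n < 2:
            return 0.0  # not enough data for a spread estimate yet
        std = math.sqrt(self.m2 / (self.n - 1))  # sample standard deviation
        # z-score of x relative to the statistics so far (including x).
        return 0.0 if std == 0.0 else (x - self.mean) / std


# Usage: the same movement pattern at ten times the amplitude.
user_a, user_b = RunningNormalizer(), RunningNormalizer()
pattern = [1.0, 2.0, 3.0, 4.0, 10.0]
z_a = [user_a.update(x) for x in pattern]
z_b = [user_b.update(10.0 * x) for x in pattern]
```

Both users end up with the same normalized values, which is the property a per-user calibration step needs.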

Looking ahead, the research team plans to expand testing with additional subjects and extend the gesture vocabulary to include more continuous or user-defined movements. Eventually, they hope robots will learn from these interactions to better understand tasks and provide more predictive assistance or enhanced autonomy.

"This system brings us closer to collaborating seamlessly with robots, so that they can become more effective and intelligent tools for everyday tasks," DelPreto concludes. "As these interactions become more accessible and pervasive, the potential for synergistic benefits continues to grow."

DelPreto and Rus presented their findings virtually at the ACM/IEEE International Conference on Human-Robot Interaction.