Researchers at the Massachusetts Institute of Technology are working to automate a key step in applying artificial intelligence to medical diagnosis. Their systems automatically process complex medical data, removing a time-consuming manual step that becomes increasingly impractical as datasets grow ever larger.

Predictive analytics for clinical decision-making has wide-ranging potential in patient care. Machine-learning models that find subtle patterns in patient data can, for example, help improve sepsis treatment, design personalized chemotherapy regimens, and predict a patient's risk of breast cancer or of dying in the ICU.

Training datasets typically include many sick and healthy patients but relatively little data for each individual. Medical experts must painstakingly identify the aspects of the data, or "features," that will be useful for making predictions, a process that demands both expertise and time.

Manual feature engineering becomes far harder with wearable medical devices. Because these devices can continuously monitor a patient's vital signs, sleep, mobility, and even voice, researchers can accumulate billions of data points for a single patient in just one week.

At the recent Machine Learning for Healthcare conference, MIT scientists presented a system that automatically learns features indicative of vocal cord disorders. They applied it to data from about 100 participants, each contributing roughly a week of voice-monitoring data totaling several billion samples. In other words, the dataset had few subjects but a very large amount of data per subject.
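A back-of-the-envelope calculation shows how one week of monitoring can reach several billion samples per person. The sketch below assumes a sampling rate of 11,025 Hz and about 16 waking hours of recording per day; both values are illustrative assumptions, not figures reported by the researchers.

```python
def samples_per_week(rate_hz: int, hours_per_day: float, days: int = 7) -> int:
    """Raw samples one sensor channel records over `days` of monitoring."""
    seconds = hours_per_day * 60 * 60 * days
    return round(rate_hz * seconds)

# Hypothetical values for illustration only: an audio-range sampling rate
# and roughly 16 waking hours of monitoring per day.
per_subject = samples_per_week(rate_hz=11_025, hours_per_day=16)
print(f"{per_subject:,} samples per subject")  # 4,445,280,000 samples per subject
```

Under these assumptions a single participant yields about 4.4 billion samples, consistent with the "several billion" scale described above.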

The data were collected with small accelerometers attached to participants' necks, which capture subtle vocal vibrations throughout daily activities. In experiments, the system used automatically extracted features to distinguish, with high accuracy, patients with vocal cord nodules from those without, and it did so without any manually engineered features.

"Modern technology makes collecting longitudinal time-series data increasingly straightforward, but we still need medical experts to apply their knowledge to label these datasets," explains lead author Jose Javier Gonzalez Ortiz, a doctoral candidate at MIT's Computer Science and Artificial Intelligence Laboratory. "Our objective is to eliminate this manual burden on specialists and delegate all feature engineering to machine learning systems."

While this model focused on vocal cord disorders, the researchers say the same approach can be adapted to learn patterns associated with other diseases and conditions. Detecting the daily voice-use patterns linked to vocal cord nodules is an important step toward better methods to prevent, diagnose, and treat the disorder.

The research team included Gonzalez Ortiz; John Guttag, the Dugald C. Jackson Professor of Computer Science and Electrical Engineering; Robert Hillman, Jarrad Van Stan, and Daryush Mehta, all of Massachusetts General Hospital's Center for Laryngeal Surgery and Voice Rehabilitation; and Marzyeh Ghassemi of the University of Toronto.

Advanced Feature-Learning Methodology

For several years, MIT researchers have worked with the Center for Laryngeal Surgery and Voice Rehabilitation to develop a specialized sensor that tracks voice use throughout a person's waking hours, and to analyze the data it produces. The sensor is an accelerometer with a small node that sticks to the neck and connects to a smartphone, continuously recording vocal activity.

In their investigation, the team collected seven days of this time-series data from 104 participants, half of whom had been diagnosed with vocal cord nodules. For each patient, researchers identified a matched control subject with similar characteristics including age, gender, profession, and other relevant factors.

Conventional approaches require experts to manually identify potentially useful features for models detecting various medical conditions. This manual process helps prevent overfitting—a common challenge in healthcare machine learning where models "memorize" patient-specific data rather than learning clinically relevant patterns. Such models typically struggle to recognize similar patterns in new, unseen patients during testing phases.

"Instead of identifying clinically meaningful features, models might simply recognize patterns and think, 'This is Sarah, who I know is healthy, and this is Peter, who has a vocal cord nodule,'" Gonzalez Ortiz explains. "The model essentially memorizes patient-specific patterns rather than learning generalizable features. When it later encounters data from Andrew with a new vocal pattern, it cannot determine whether those patterns correspond to a particular classification."
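A standard way to check whether a model is memorizing individuals, as in the Sarah-and-Peter example, is to split the data by subject so that no person's recordings appear in both the training and the test set. The article does not describe exactly how the team partitioned its data; the following is a minimal sketch of a subject-wise split, using a hypothetical record format of `(subject_id, features, label)` and hypothetical subject IDs.

```python
import random

def subject_wise_split(records, test_fraction=0.2, seed=0):
    """Split (subject_id, features, label) records so that every subject's data
    lands entirely in train or entirely in test, never both."""
    subjects = sorted({subject_id for subject_id, _, _ in records})
    random.Random(seed).shuffle(subjects)  # reproducible shuffle of subject IDs
    n_test = max(1, round(len(subjects) * test_fraction))
    test_ids = set(subjects[:n_test])
    train = [r for r in records if r[0] not in test_ids]
    test = [r for r in records if r[0] in test_ids]
    return train, test

# Hypothetical per-segment records for three subjects.
records = [
    ("s1", [0.1, 0.2], "control"), ("s1", [0.2, 0.1], "control"),
    ("s2", [0.9, 0.8], "patient"), ("s2", [0.8, 0.7], "patient"),
    ("s3", [0.7, 0.9], "patient"),
]
train, test = subject_wise_split(records, test_fraction=0.34)
print(sorted({r[0] for r in train}), sorted({r[0] for r in test}))
```

Splitting by segment instead would let the same person's voice appear on both sides, so a model could score well by recognizing the speaker rather than the condition.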

The central challenge was preventing overfitting without relying on manual feature engineering. To address it, the researchers designed their model to learn features that do not capture patient-identifying information. For voice data, this meant capturing every instance in which a subject spoke, along with the intensity of their voice.

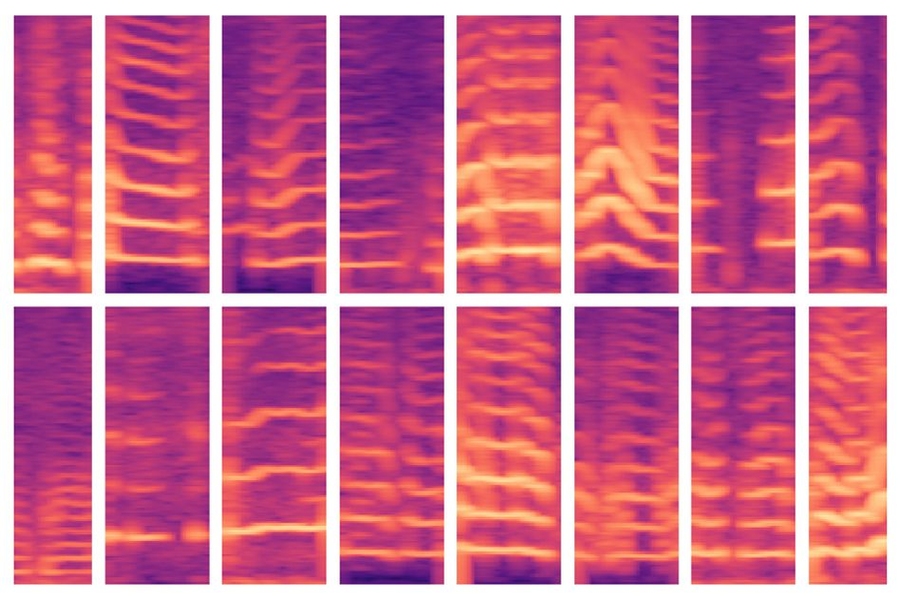

As the model processes each participant's data, it identifies voicing segments—which constitute only about 10% of the total data. For each voicing segment, the system generates a spectrogram, a visual representation of frequency spectra over time commonly used in speech processing. These spectrograms are stored as large matrices containing thousands of data points.
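The voicing-detection and spectrogram steps can be sketched in Python with NumPy. This is an illustration under stated assumptions: voicing is detected with a simple frame-energy threshold (the per-frame RMS level doubles as a rough intensity measure), and the frame length, hop size, and -30 dB threshold are arbitrary choices, not the study's parameters.

```python
import numpy as np

def frame_signal(x, frame_len, hop):
    """Slice a 1-D signal into overlapping frames of shape (n_frames, frame_len)."""
    n_frames = 1 + (len(x) - frame_len) // hop
    starts = hop * np.arange(n_frames)[:, None]
    return x[starts + np.arange(frame_len)[None, :]]

def spectrogram(x, frame_len=512, hop=256):
    """Magnitude spectrogram: one row per frame, frame_len // 2 + 1 frequency bins."""
    frames = frame_signal(x, frame_len, hop) * np.hanning(frame_len)
    return np.abs(np.fft.rfft(frames, axis=1))

def voiced_mask(x, frame_len=512, hop=256, threshold_db=-30.0):
    """Flag frames whose RMS level is within threshold_db of the loudest frame.
    Energy thresholding is an assumed stand-in for the study's voicing detector."""
    frames = frame_signal(x, frame_len, hop)
    rms = np.sqrt(np.mean(frames ** 2, axis=1)) + 1e-12  # per-frame intensity
    level_db = 20.0 * np.log10(rms / rms.max())
    return level_db > threshold_db

def voiced_segments(x, frame_len=512, hop=256, threshold_db=-30.0):
    """Return one spectrogram matrix per contiguous run of voiced frames."""
    mask = voiced_mask(x, frame_len, hop, threshold_db)
    spec = spectrogram(x, frame_len, hop)
    segments, start = [], None
    for i, voiced in enumerate(mask):
        if voiced and start is None:
            start = i                        # a voiced run begins
        elif not voiced and start is not None:
            segments.append(spec[start:i])   # a voiced run ends
            start = None
    if start is not None:                    # run extends to the end of the signal
        segments.append(spec[start:])
    return segments

# Synthetic example: quiet noise, one second of a 200 Hz "voice" tone, quiet noise.
sr = 8000
rng = np.random.default_rng(0)
quiet = 0.001 * rng.standard_normal(sr)
tone = 0.5 * np.sin(2 * np.pi * 200 * np.arange(sr) / sr)
signal = np.concatenate([quiet, tone, quiet])
segments = voiced_segments(signal)
print(len(segments), segments[0].shape)  # 1 (33, 257)
```

Each returned segment is a frames-by-frequency matrix, so even a few seconds of speech already produces thousands of values per segment.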

Because these matrices are so large, processing them directly would be computationally expensive. Instead, an autoencoder, a neural network designed to learn efficient representations of large inputs, first compresses each spectrogram into a compact 30-value encoding and then decodes that encoding back into a reconstructed spectrogram.

The autoencoder is trained so that each reconstructed spectrogram matches its original input as closely as possible. Through this process, it learns compressed representations of every spectrogram segment across each participant's complete time-series data. These compressed representations become the features used to train machine-learning models that make predictions.
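A toy autoencoder makes the compress-then-reconstruct idea concrete. The sketch below uses NumPy, a single tanh hidden layer of 30 units to match the 30-value encoding, and plain gradient descent on mean squared reconstruction error. It assumes each spectrogram has already been cropped or resized to a fixed size and flattened into a vector; the architecture, learning rate, and synthetic data are all assumptions, not the researchers' model.

```python
import numpy as np

class TinyAutoencoder:
    """Toy autoencoder: input_dim values -> code_size values -> input_dim values.
    A single tanh hidden layer is an assumption, not the study's architecture."""

    def __init__(self, input_dim, code_size=30, seed=0):
        rng = np.random.default_rng(seed)
        self.W_enc = rng.normal(0.0, 1.0 / np.sqrt(input_dim), (input_dim, code_size))
        self.b_enc = np.zeros(code_size)
        self.W_dec = rng.normal(0.0, 1.0 / np.sqrt(code_size), (code_size, input_dim))
        self.b_dec = np.zeros(input_dim)

    def encode(self, X):
        """Compress each row of X into a code_size-value encoding."""
        return np.tanh(X @ self.W_enc + self.b_enc)

    def decode(self, Z):
        """Reconstruct full-size vectors from encodings."""
        return Z @ self.W_dec + self.b_dec

    def train_step(self, X, lr=0.1):
        """One full-batch gradient-descent step; returns the loss before the update."""
        Z = self.encode(X)
        err = self.decode(Z) - X
        loss = np.mean(err ** 2)                            # mean squared reconstruction error
        d_out = 2.0 * err / err.size                        # gradient w.r.t. the reconstruction
        d_hidden = (d_out @ self.W_dec.T) * (1.0 - Z ** 2)  # back through tanh
        self.W_dec -= lr * (Z.T @ d_out)
        self.b_dec -= lr * d_out.sum(axis=0)
        self.W_enc -= lr * (X.T @ d_hidden)
        self.b_enc -= lr * d_hidden.sum(axis=0)
        return loss

rng = np.random.default_rng(1)
# Synthetic stand-in for 200 flattened, fixed-size spectrograms of 64 values each.
X = rng.normal(size=(200, 8)) @ rng.normal(size=(8, 64)) / np.sqrt(8)
model = TinyAutoencoder(input_dim=64, code_size=30)
losses = [model.train_step(X) for _ in range(500)]
features = model.encode(X)  # shape (200, 30): one compact feature vector per segment
print(f"loss {losses[0]:.3f} -> {losses[-1]:.3f}")
```

Only the encoder output is kept as features; the decoder exists solely to force the 30 values to retain enough information to rebuild the input. A production system would more likely use a deep-learning framework and a convolutional architecture.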

Distinguishing Normal and Abnormal Patterns

During training, the model learns to associate these features with either "patients" or "controls." Individuals with vocal cord disorders typically exhibit more abnormal voicing patterns than healthy controls. When testing with new participants, the model similarly condenses all spectrogram segments into a reduced set of features and applies a majority-rules approach: if a subject predominantly displays abnormal voicing segments, they're classified as patients; if they primarily show normal patterns, they're classified as controls.
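The majority-rules step reduces to a vote over per-segment decisions. In this minimal sketch, a segment-level classifier is assumed to exist already and to output a hypothetical abnormality score per segment; the 0.5 threshold and the tie-breaking rule (ties go to "control") are assumptions, since the article does not specify them.

```python
from collections import Counter
from typing import Iterable

def classify_subject(segment_is_abnormal: Iterable[bool]) -> str:
    """Majority vote over one subject's voicing segments.
    Ties go to "control"; the article does not say how ties are handled."""
    votes = Counter(bool(v) for v in segment_is_abnormal)
    return "patient" if votes[True] > votes[False] else "control"

def classify_subject_from_scores(scores: Iterable[float], threshold: float = 0.5) -> str:
    """Threshold hypothetical per-segment abnormality scores, then take the majority vote."""
    return classify_subject(score > threshold for score in scores)

print(classify_subject_from_scores([0.9, 0.7, 0.2]))  # patient
print(classify_subject_from_scores([0.9, 0.1, 0.2]))  # control
```

Aggregating per subject means a handful of atypical segments cannot flip the diagnosis on their own.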

In experiments, the model achieved accuracy comparable to state-of-the-art systems that require manual feature engineering. Notably, the researchers' model performed accurately on both the training and the test data, indicating it was learning clinically relevant patterns rather than patient-specific information.

Looking ahead, the research team aims to monitor how interventions such as surgery and voice therapy affect vocal behavior. If a patient's patterns shift from abnormal to normal over time, the patient is most likely improving. The researchers also plan to apply similar techniques to electrocardiogram data, which records the heart's electrical activity.