Robots that learn household tasks are becoming steadily more capable. Researchers at MIT have developed a system that lets autonomous robots learn chores, such as setting a table, by watching humans perform them. Because the robot learns from demonstration rather than explicit code, the approach could make robot training accessible to people without specialized programming knowledge.

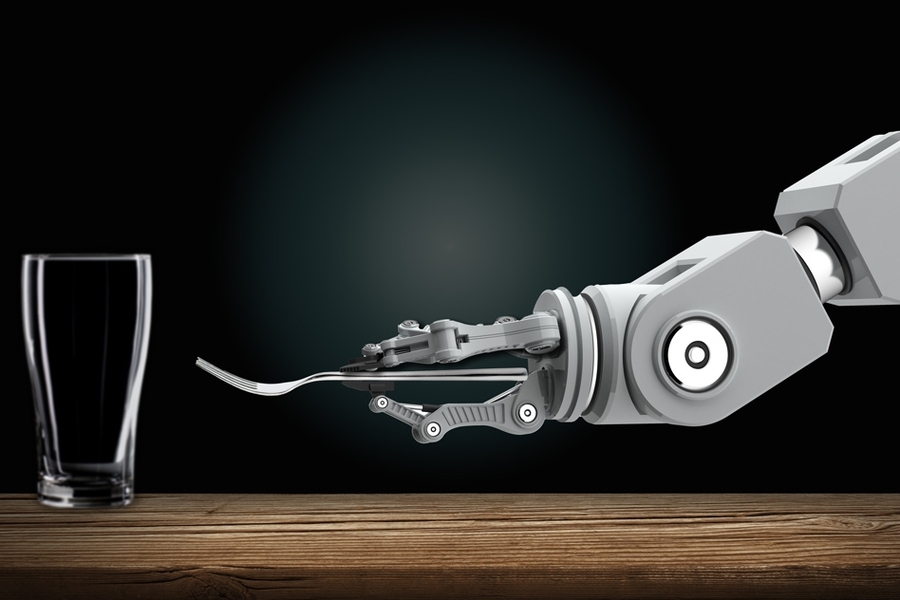

Imagine a home robot that learns to set the dinner table or tidy a room simply by watching you do it. In the workplace, such machines could be trained much like new employees, picking up new duties through demonstration rather than reprogramming.

At the core of this work is a system called Planning with Uncertain Specifications (PUnS), which gives robots a humanlike planning ability: weighing many ambiguous, and potentially conflicting, requirements at once to reach an end goal. Where conventional robots struggle with ambiguity, a robot using PUnS chooses the most likely action based on its "belief" about the probable specifications of the task.

To test the system, the researchers compiled data on how eight objects commonly found on a table, including mugs, glasses, utensils, and plates, can be arranged in various configurations. A robotic arm observed multiple human demonstrations of table setting, then replicated and adapted these arrangements both in real-world experiments and in thousands of simulated scenarios.

The robot made no errors across multiple real-world trials and only a handful of mistakes over tens of thousands of simulated runs, even when objects were missing, hidden, or deliberately rearranged. The results illustrate how far training robots through human demonstration has come.

"Our vision democratizes robotics programming, empowering domain experts to train machines through intuitive demonstration rather than technical specifications," explains Ankit Shah, lead researcher and graduate student in MIT's Department of Aeronautics and Astronautics. "This advancement means factory workers can teach robots complex assembly procedures, while homeowners can instruct domestic robots to organize cabinets, load dishwashers, or arrange dining settings."

The research team also includes graduate student Shen Li and Professor Julie Shah; the work is a collaboration between MIT's Department of Aeronautics and Astronautics and the Computer Science and Artificial Intelligence Laboratory (CSAIL).

Intelligent Decision-Making Under Uncertainty

Traditional robotics excels when tasks have precise specifications: clear parameters defining the actions, the environmental conditions, and the desired outcome. Learning by observing humans, however, introduces many uncertainties. Table setting, for instance, depends on variables such as the menu, where guests are seated, which items are available, and social conventions, factors that overwhelm conventional robotic planning systems.

Reinforcement learning is a popular approach in robotics, but it struggles with tasks whose specifications are uncertain. It relies on rewards and penalties to tell the robot which actions are correct, and without a clear reward structure the robot cannot distinguish correct actions from incorrect ones.

PUnS overcomes this limitation by letting the robot maintain a "belief" over multiple candidate specifications. That belief then shapes the rewards and penalties, so the robot effectively hedges its bets about what the task requires instead of committing to a single, rigidly programmed specification.
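
To make the idea of a belief more concrete, here is a minimal Python sketch, not the researchers' code. It assumes a drastically simplified setting in which each candidate specification is a yes/no check on the final set of items on the table; the names `SPECS`, `BELIEF`, and `expected_satisfaction`, and the probabilities, are hypothetical. An outcome is scored by the total probability of the specifications it satisfies, which favors arrangements that hedge across several plausible readings of the task.

```python
from typing import Callable, Dict, FrozenSet

# Simplification (assumption): an outcome is just the set of items left on the table.
Arrangement = FrozenSet[str]

# Hypothetical candidate specifications, each a yes/no check on the final arrangement.
SPECS: Dict[str, Callable[[Arrangement], bool]] = {
    "plate_and_fork": lambda a: {"dinner_plate", "fork"} <= a,
    "bowl_present": lambda a: "bowl" in a,
    "no_glass": lambda a: "glass" not in a,
}

# Hypothetical belief: the probability the robot assigns to each specification.
BELIEF: Dict[str, float] = {"plate_and_fork": 0.6, "bowl_present": 0.3, "no_glass": 0.1}


def expected_satisfaction(arrangement: Arrangement) -> float:
    """Total probability of the candidate specifications this arrangement satisfies."""
    return sum(p for name, p in BELIEF.items() if SPECS[name](arrangement))


if __name__ == "__main__":
    hedged = frozenset({"dinner_plate", "fork", "bowl"})
    narrow = frozenset({"dinner_plate", "fork", "glass"})
    # The hedged arrangement satisfies all three candidates, the narrow one only the first.
    print(expected_satisfaction(hedged), expected_satisfaction(narrow))
```

The first arrangement satisfies all three candidates and scores close to 1.0; the second satisfies only `plate_and_fork` and scores 0.6.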

The system is built on linear temporal logic (LTL), a formal logic for describing how conditions change over time, which lets the robot reason about current and future outcomes. The researchers created LTL templates modeling different time-based conditions: what must happen immediately, what must eventually happen, and what must continue until another event takes place. After observing 30 human demonstrations of table setting, the robot formed a probability distribution over 25 different LTL formulas, each representing a different way of setting the table.
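
The "eventually", "always", and "until" conditions can be illustrated with a small evaluator for LTL-style formulas over finite traces. This Python sketch is an illustration under stated assumptions rather than the system's implementation: it uses finite-trace semantics, treats each step of a demonstration as the set of items placed so far, and turns demonstrations into a distribution with a crude stand-in (count how many demonstrations satisfy each candidate formula, then normalize), which is not the inference method used in the research. The candidate formulas, item names, and demonstrations are hypothetical.

```python
from typing import Callable, Dict, FrozenSet, List

State = FrozenSet[str]                   # items already on the table at one step
Trace = List[State]                      # one state per step of a demonstration
Formula = Callable[[Trace, int], bool]   # is the formula true at position i of a trace?


def placed(item: str) -> Formula:
    return lambda trace, i: item in trace[i]


def neg(f: Formula) -> Formula:
    return lambda trace, i: not f(trace, i)


def eventually(f: Formula) -> Formula:
    # "Eventually": f holds at some step from i to the end of the (finite) trace.
    return lambda trace, i: any(f(trace, k) for k in range(i, len(trace)))


def globally(f: Formula) -> Formula:
    # "Always": f holds at every step from i to the end of the trace.
    return lambda trace, i: all(f(trace, k) for k in range(i, len(trace)))


def until(f: Formula, g: Formula) -> Formula:
    # "f until g": g holds at some step k >= i, and f holds at every step before k.
    return lambda trace, i: any(
        g(trace, k) and all(f(trace, j) for j in range(i, k))
        for k in range(i, len(trace))
    )


def to_trace(order: List[str]) -> Trace:
    # Step 0 is the empty table; step k holds the first k items placed.
    return [frozenset(order[:k]) for k in range(len(order) + 1)]


# Hypothetical candidate formulas built from the templates above.
CANDIDATES: Dict[str, Formula] = {
    "plate_before_fork": until(neg(placed("fork")), placed("dinner_plate")),
    "fork_before_plate": until(neg(placed("dinner_plate")), placed("fork")),
    "bowl_eventually": eventually(placed("bowl")),
    "never_glass": globally(neg(placed("glass"))),
}


def frequency_belief(demos: List[List[str]]) -> Dict[str, float]:
    # Crude stand-in for specification inference: count satisfying demos, then normalize.
    counts = {name: sum(f(to_trace(d), 0) for d in demos) for name, f in CANDIDATES.items()}
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no demonstration satisfies any candidate formula")
    return {name: c / total for name, c in counts.items()}


# Hypothetical demonstrations, each recorded as the order in which items were placed.
DEMOS = [
    ["dinner_plate", "fork", "knife", "bowl"],
    ["dinner_plate", "bowl", "fork", "knife"],
    ["fork", "dinner_plate", "knife"],
]

if __name__ == "__main__":
    print(frequency_belief(DEMOS))
    # {'plate_before_fork': 0.25, 'fork_before_plate': 0.125, 'bowl_eventually': 0.25, 'never_glass': 0.375}
```

The formula every demonstration satisfies (`never_glass`) receives the largest share, while `fork_before_plate`, which only one demonstration follows, receives the smallest.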

"Each formula encodes different preferences, but when the robot considers various combinations and attempts to satisfy everything simultaneously, it ultimately achieves the desired outcome," Ankit Shah notes.

Adaptive Performance Criteria

The research team developed multiple criteria to guide robots toward satisfying their belief about task requirements. These include satisfying the most probable formula, accommodating the maximum number of unique formulas regardless of probability, prioritizing formulas representing the highest collective probability, or minimizing error potential by avoiding high-risk formulas.
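
The four criteria can be read as alternative ways of scoring a finished execution against a belief. In this hypothetical Python sketch, the belief is a dictionary of formula probabilities and an execution is summarized by the set of formulas it satisfies. The first three functions follow the descriptions above directly; `risk_threshold` and its `min_prob` cutoff are only an assumed reading of "avoiding high-risk formulas" (ignore formulas below a probability cutoff and require every remaining one to be satisfied), not the published definition.

```python
from typing import Dict, Set

Belief = Dict[str, float]  # formula name -> probability that it is the intended one


def most_likely(belief: Belief, satisfied: Set[str]) -> float:
    # Only the single most probable formula counts.
    best = max(belief, key=belief.get)
    return 1.0 if best in satisfied else 0.0


def max_coverage(belief: Belief, satisfied: Set[str]) -> float:
    # Count satisfied formulas with nonzero probability, ignoring how probable each is.
    return float(sum(1 for name, p in belief.items() if p > 0 and name in satisfied))


def total_probability(belief: Belief, satisfied: Set[str]) -> float:
    # Sum the probability of every satisfied formula.
    return sum(p for name, p in belief.items() if name in satisfied)


def risk_threshold(belief: Belief, satisfied: Set[str], min_prob: float = 0.2) -> float:
    # ASSUMED reading of "avoiding high-risk formulas": drop formulas below min_prob
    # and succeed only if every remaining formula is satisfied.
    kept = {name for name, p in belief.items() if p >= min_prob}
    return 1.0 if kept <= satisfied else 0.0


if __name__ == "__main__":
    belief = {"A": 0.5, "B": 0.3, "C": 0.15, "D": 0.05}  # hypothetical belief
    satisfied = {"A", "C", "D"}
    for criterion in (most_likely, max_coverage, total_probability, risk_threshold):
        print(criterion.__name__, criterion(belief, satisfied))
```

For this example, `most_likely` returns 1.0, `max_coverage` 3.0, `total_probability` about 0.7, and `risk_threshold` 0.0, because formula B survives the cutoff but is not satisfied. The same execution can therefore look good or bad depending on the criterion chosen.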

Designers can choose whichever criterion suits the task. Safety-critical applications might prioritize minimizing errors, while lower-stakes tasks can afford more flexibility.

With the criteria in place, the researchers developed an algorithm that converts the robot's belief into an equivalent reinforcement learning problem. The resulting model rewards or penalizes the robot's actions according to how well they satisfy the specifications, as measured by the chosen criterion.
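
Once a criterion turns the belief into a score, that score can serve as the reward of a sequential decision problem. The Python sketch below is a simplified stand-in for the researchers' algorithm: it assumes the total-probability criterion, hypothetical ordering specifications and probabilities, and a reward paid only when the table is complete, and it solves this small problem exactly by dynamic programming over placement orders instead of with a learning algorithm.

```python
from functools import lru_cache
from typing import Callable, Dict, Tuple

Order = Tuple[str, ...]  # items placed so far, in placement order
ITEMS: Order = ("dinner_plate", "fork", "knife", "bowl")


def before(a: str, b: str) -> Callable[[Order], bool]:
    # Hypothetical ordering specification: a and b are both placed, a first.
    return lambda order: a in order and b in order and order.index(a) < order.index(b)


# Hypothetical belief: probability of each candidate specification.
BELIEF: Dict[str, Tuple[float, Callable[[Order], bool]]] = {
    "plate_before_fork": (0.5, before("dinner_plate", "fork")),
    "fork_before_plate": (0.2, before("fork", "dinner_plate")),
    "plate_before_bowl": (0.3, before("dinner_plate", "bowl")),
}


def terminal_reward(order: Order) -> float:
    # Total-probability criterion, paid once when the table is complete.
    return sum(p for p, spec in BELIEF.values() if spec(order))


@lru_cache(maxsize=None)
def value(order: Order) -> float:
    # Best achievable reward from this partial sequence (deterministic, undiscounted).
    remaining = [x for x in ITEMS if x not in order]
    if not remaining:
        return terminal_reward(order)
    return max(value(order + (x,)) for x in remaining)


def greedy_plan() -> Order:
    # Follow the action with the highest value at every step.
    order: Order = ()
    while len(order) < len(ITEMS):
        remaining = [x for x in ITEMS if x not in order]
        order = order + (max(remaining, key=lambda x: value(order + (x,))),)
    return order


if __name__ == "__main__":
    plan = greedy_plan()
    print(plan, terminal_reward(plan))
```

Because `plate_before_fork` and `fork_before_plate` cannot both hold, the best achievable reward is about 0.8: the plan places the plate before both the fork and the bowl, giving up the less likely ordering rather than trying to satisfy everything.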

Across 20,000 simulated table-setting scenarios, the robot made only six errors, an error rate of 0.03 percent. In real-world demonstrations it behaved much as a person would: when an item was not initially visible, the robot completed the rest of the table setting, then returned to place the missing item once it was found.

"This adaptability is crucial," Ankit Shah emphasizes. "Without it, the robot would stall when expecting to place an unavailable item, failing to complete the remaining setup."
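
The deferral behavior can be illustrated with a hand-written rule, shown here as a hypothetical Python sketch. It only mimics the behavior described above; it is not how the source says the robot arrives at it. The executor tries items in plan order, moves any item that is not yet visible to the back of the queue, and comes back to it later, so a hidden item does not stall the rest of the setup. The `visible_at` callback and the `max_steps` limit are assumptions made for the example.

```python
from collections import deque
from typing import Callable, Deque, List, Tuple


def execute(
    plan: List[str],
    visible_at: Callable[[str, int], bool],
    max_steps: int = 100,
) -> List[Tuple[int, str]]:
    """Place items in plan order, deferring any item that is not visible yet.

    Returns (step, item) pairs in the order the items were placed.
    """
    pending: Deque[str] = deque(plan)
    placed: List[Tuple[int, str]] = []
    step = 0
    while pending and step < max_steps:
        # Try each pending item once; place the first visible one.
        for _ in range(len(pending)):
            item = pending.popleft()
            if visible_at(item, step):
                placed.append((step, item))
                break
            pending.append(item)  # hidden: move it to the back and try the next item
        step += 1  # time advances whether or not anything was placed
    return placed


if __name__ == "__main__":
    plan = ["dinner_plate", "fork", "knife", "bowl"]

    def fork_hidden_until_3(item: str, step: int) -> bool:
        return item != "fork" or step >= 3

    print(execute(plan, fork_hidden_until_3))
    # [(0, 'dinner_plate'), (1, 'knife'), (2, 'bowl'), (3, 'fork')]
```

With the fork hidden until step 3, the executor sets the plate, knife, and bowl first and places the fork as soon as it appears; a naive executor that insisted on the fork at step 1 would instead wait there and leave the rest of the table unset.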

Looking ahead, the research team aims to enhance the system to respond to verbal instructions, corrections, or performance feedback. "We envision a future where a person demonstrates a task once, then simply instructs the robot to 'repeat this for all other locations' or 'modify the arrangement this way,'" Ankit Shah explains. "Our goal is enabling natural adaptation to verbal commands without requiring additional demonstrations."