Not long ago, watching a movie on a smartphone seemed like science fiction. In the mid-2000s, as a graduate student at MIT, Vivienne Sze was drawn to the challenge of video compression: preserving visual quality while conserving battery life. Her approach was to design energy-saving circuits and algorithms together.

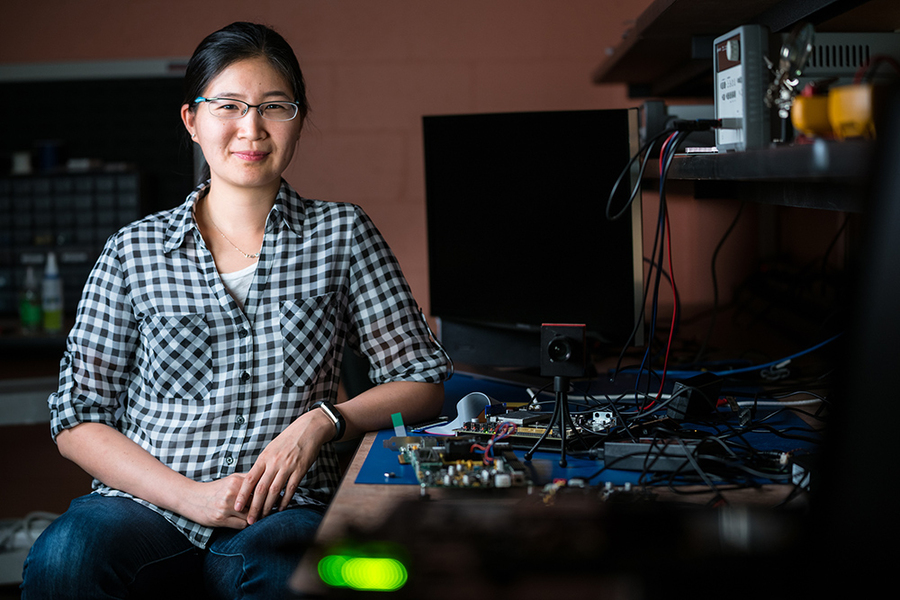

Sze later joined a team that won an Engineering Emmy Award for developing video compression standards that are still widely used today. Now an associate professor in MIT's Department of Electrical Engineering and Computer Science, Sze has set her sights on a new goal: enabling artificial intelligence applications to run on smartphones and miniature robots.

Her research focuses on designing more efficient deep neural networks for processing video, and hardware that can run these applications with minimal power. She recently co-authored a book on the subject, and this June she will teach a professional education course on designing efficient deep learning systems.

On April 29, Sze will join Assistant Professor Song Han for an MIT Quest AI Roundtable on the co-design of efficient hardware and software, moderated by Aude Oliva, director of MIT Quest Corporate and MIT director of the MIT-IBM Watson AI Lab. Here, Sze discusses her recent work.

Q: Why is energy-efficient AI becoming essential today?

A: AI applications are increasingly deployed on smartphones, tiny robots, and other internet-connected devices with limited power and processing capability. The core challenge is that AI requires a great deal of computation. For example, processing the sensor and camera data of a self-driving car can take about 2,500 watts of computing power, while a smartphone has a budget of roughly 1 watt. Closing this gap will require rethinking the entire technology stack, a shift that will shape AI's development over the coming decade.
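Using the round figures in this answer, the size of the gap works out as follows (a back-of-the-envelope ratio, not a measured comparison):

```latex
\frac{2500\ \mathrm{W}}{1\ \mathrm{W}} = 2500 \approx 10^{3.4}
```

In other words, a phone's power budget is more than three orders of magnitude below what the car example consumes.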

Q: Why does it matter to run AI directly on smartphones?

A: It changes where data are processed: computation can happen on the device rather than on remote cloud servers in large data centers. Decoupling computation from the cloud broadens access to AI, for example for people in developing regions with limited communication infrastructure. It also cuts response time by removing the round trip to distant servers, which is critical for interactive applications such as autonomous navigation and augmented reality that must react instantly to a changing environment. Finally, processing data on the device where they are collected helps protect the privacy of medical and other sensitive information.

Q: What factors contribute to the inefficiency of modern AI systems?

A: Deep neural networks, the foundation of modern AI, can require hundreds of millions to billions of computations, orders of magnitude more than compressing video on a phone. But the energy cost of deep networks comes not only from computation; it also comes from moving data between memory and the processing units. The farther the data have to travel and the more data there are, the greater the energy cost and the worse the bottleneck.
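To see why data movement can outweigh arithmetic, here is a minimal sketch of an energy estimate that charges each multiply-accumulate (MAC) and each memory access a cost relative to one MAC. The relative costs (1x for a register-file access, 6x for an on-chip global buffer, 200x for off-chip DRAM) are illustrative assumptions, similar in spirit to relative figures published for Eyeriss; real values depend on the process technology and the design.

```python
from typing import Dict

# Relative energy per operation, normalized to one MAC.
# Illustrative assumptions only, not measurements of any particular chip.
RELATIVE_COST = {
    "mac": 1.0,
    "register_file": 1.0,
    "global_buffer": 6.0,
    "dram": 200.0,
}


def estimate_energy(macs: int, accesses: Dict[str, int]) -> float:
    """Return total energy in MAC-equivalents for a workload.

    `accesses` maps a memory level (a key of RELATIVE_COST) to the number
    of accesses made at that level.
    """
    energy = macs * RELATIVE_COST["mac"]
    for level, count in accesses.items():
        energy += count * RELATIVE_COST[level]
    return energy


# Toy workload: 1 million MACs, each needing two operands.
# Case 1: every operand is fetched from DRAM.
# Case 2: almost all operands are served from the local register file.
macs = 1_000_000
all_from_dram = estimate_energy(macs, {"dram": 2 * macs})
mostly_local = estimate_energy(
    macs, {"register_file": 2 * macs - 20_000, "dram": 20_000}
)
print(f"all operands from DRAM: {all_from_dram:,.0f}")
print(f"mostly local reuse:     {mostly_local:,.0f}")
```

With the same number of MACs, the first case costs about 57 times more under these assumed weights, almost all of it in DRAM traffic.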

Q: How are you redesigning AI hardware to achieve superior energy efficiency?

A: We focus on reducing data movement and the amount of data needed for computation. In some deep networks, the same data are used many times for different computations. We design specialized hardware that reuses data locally instead of fetching them from off-chip memory each time. Keeping reused data on-chip makes the process far more energy-efficient.
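The reuse principle can be made concrete by counting off-chip reads for a 1-D convolution. The sketch compares a naive schedule that re-fetches both operands for every MAC with one that loads the filter weights once into a local buffer and keeps a sliding window of inputs on-chip. The 1-D setting and the function names are simplifications chosen for illustration; Eyeriss itself targets 2-D convolutions with a more elaborate dataflow.

```python
def conv1d_naive(inputs, weights):
    """1-D convolution (no padding) that fetches every operand off-chip per MAC."""
    out_len = len(inputs) - len(weights) + 1
    outputs, offchip_reads = [], 0
    for i in range(out_len):
        acc = 0
        for k in range(len(weights)):
            acc += inputs[i + k] * weights[k]
            offchip_reads += 2  # one input and one weight fetched for this MAC
        outputs.append(acc)
    return outputs, offchip_reads


def conv1d_with_reuse(inputs, weights):
    """Same result, but weights stay in a local buffer and each input is fetched once."""
    local_weights = list(weights)   # weights fetched once, then reused for every output
    offchip_reads = len(weights)
    window, outputs = [], []        # sliding window of inputs held on-chip
    for x in inputs:
        window.append(x)
        offchip_reads += 1          # each input is fetched exactly once
        if len(window) > len(local_weights):
            window.pop(0)           # drop the input no longer needed
        if len(window) == len(local_weights):
            outputs.append(sum(a * w for a, w in zip(window, local_weights)))
    return outputs, offchip_reads


inputs = list(range(10))
weights = [1, 2, 3]
naive_out, naive_reads = conv1d_naive(inputs, weights)
reuse_out, reuse_reads = conv1d_with_reuse(inputs, weights)
assert naive_out == reuse_out       # same computation either way
print(naive_reads, reuse_reads)     # 48 13
```

Both schedules perform the same MACs; only the number of off-chip reads changes, which is where the energy savings come from.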

We also optimize the order in which data are processed to maximize reuse. This is the key idea behind the Eyeriss chip, developed in collaboration with Joel Emer. In follow-up work, Eyeriss v2, we made the chip more flexible so that it can reuse data across a wider range of deep networks. The Eyeriss chip also uses compression to reduce data movement, a common tactic among AI chips. The low-power Navion chip, developed with Sertac Karaman for mapping and navigation in robotics, uses two to three orders of magnitude less energy than a conventional CPU, in part through optimizations that reduce both the amount of data processed and the amount stored on-chip.
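Activations in deep networks often contain many zeros (for example after a ReLU), which is one reason compression pays off. A minimal sketch of zero run-length encoding, one simple scheme of this kind, stores each nonzero value together with the number of zeros preceding it. This is a generic illustration; it is not the exact encoding format used on Eyeriss.

```python
def rle_encode(values):
    """Encode as (zeros_before, value) pairs plus a count of trailing zeros."""
    pairs, run = [], 0
    for v in values:
        if v == 0:
            run += 1
        else:
            pairs.append((run, v))
            run = 0
    return pairs, run


def rle_decode(pairs, trailing_zeros):
    """Invert rle_encode, restoring the original sequence."""
    out = []
    for run, v in pairs:
        out.extend([0] * run)
        out.append(v)
    out.extend([0] * trailing_zeros)
    return out


activations = [0, 0, 3, 0, 0, 0, 7, 1, 0, 0]
pairs, tail = rle_encode(activations)
print(pairs, tail)  # [(2, 3), (3, 7), (0, 1)] 2
assert rle_decode(pairs, tail) == activations
```

Ten values shrink to three pairs and a tail count; the sparser the data, the fewer words need to cross the memory interface.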

Q: What software innovations have you implemented to enhance efficiency?

A: The more closely software is aligned with hardware-relevant metrics such as energy efficiency, the better we can optimize. Pruning, for example, is a popular way to remove weights from a deep network to reduce computation. But rather than removing weights based only on their magnitude, our work on energy-aware pruning shows that targeting the more energy-intensive weights can further reduce overall energy consumption. Another method we developed, NetAdapt, automates the process of adapting and optimizing a deep network for a smartphone or other hardware platform. Our recent follow-up, NetAdaptv2, speeds up this optimization process to further improve efficiency.
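NetAdapt, as described in its published paper, works iteratively: at each step it tries shrinking each layer just enough to meet a resource-reduction target measured on the target platform, briefly fine-tunes each proposal, and keeps the most accurate one. The sketch below is a heavily simplified toy under stated assumptions: the network is reduced to a list of layer widths, `toy_cost` and `toy_accuracy` are hypothetical stand-ins for on-device measurement and short-term fine-tuning, and the choice of which filters to remove is not modeled.

```python
from typing import Callable, List


def netadapt_sketch(
    widths: List[int],
    cost: Callable[[List[int]], float],
    accuracy: Callable[[List[int]], float],
    budget: float,
    step: float,
) -> List[int]:
    """Greedily shrink one layer per iteration until cost(widths) <= budget."""
    widths = list(widths)
    while cost(widths) > budget:
        current = cost(widths)
        best = None
        for layer in range(len(widths)):
            candidate = list(widths)
            # Remove filters one at a time until cost drops by at least `step`.
            while candidate[layer] > 1 and current - cost(candidate) < step:
                candidate[layer] -= 1
            if current - cost(candidate) < step:
                continue  # this layer alone cannot deliver the required reduction
            score = accuracy(candidate)
            if best is None or score > best[0]:
                best = (score, candidate)
        if best is None:
            raise ValueError("budget cannot be met by shrinking single layers")
        widths = best[1]  # keep the most accurate proposal
    return widths


def toy_cost(widths: List[int]) -> float:
    # Hypothetical cost model: MACs of a chain of 1x1 layers fed by 3 channels.
    channels = [3] + widths
    return sum(a * b for a, b in zip(channels, channels[1:]))


def toy_accuracy(widths: List[int]) -> float:
    # Hypothetical accuracy proxy with diminishing returns per layer width.
    return sum(w / (w + 8) for w in widths)


original = [16, 32, 64]
adapted = netadapt_sketch(original, toy_cost, toy_accuracy, budget=1500, step=200)
print(original, toy_cost(original), "->", adapted, toy_cost(adapted))
```

Because every accepted proposal lowers the cost by at least `step`, the loop always terminates; swapping in a measured latency or energy table for `toy_cost` is what would make the search platform-aware.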

Q: What low-power AI applications are you developing?

A: With Sertac Karaman, I'm working on autonomous navigation for energy-efficient robots. I'm also working with Thomas Heldt on a low-cost and potentially more effective way to diagnose and monitor patients with neurodegenerative disorders such as Alzheimer's and Parkinson's disease by tracking their eye movements. Properties of eye movements, such as reaction time, could serve as biomarkers of brain function. In the past, eye-movement tracking required expensive equipment and was limited to clinics. We've shown that an ordinary smartphone camera can take these measurements in a patient's home, making data collection much easier and cheaper. This could improve how disease progression is monitored and how drugs are evaluated in clinical trials.
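One way to read "reaction time" here is saccade latency: the delay between a visual stimulus appearing and the eye starting to move toward it. A minimal sketch, assuming a horizontal gaze-angle trace sampled at a fixed rate, finds saccade onset with a simple velocity-threshold rule. The function name, the 60 Hz default, and the 30 deg/s threshold are illustrative assumptions, not parameters from the study.

```python
from typing import Optional, Sequence


def saccade_latency_ms(
    gaze_deg: Sequence[float],
    stimulus_index: int,
    sample_rate_hz: float = 60.0,
    velocity_threshold_deg_s: float = 30.0,
) -> Optional[float]:
    """Return time from stimulus onset to saccade onset in milliseconds.

    Onset is the first sample at or after the stimulus whose angular velocity,
    estimated by a backward difference, exceeds the threshold.
    Returns None if no saccade is detected.
    """
    for i in range(max(stimulus_index, 1), len(gaze_deg)):
        velocity = abs(gaze_deg[i] - gaze_deg[i - 1]) * sample_rate_hz
        if velocity > velocity_threshold_deg_s:
            return (i - stimulus_index) * 1000.0 / sample_rate_hz
    return None


# Hypothetical 60 Hz trace: stimulus appears at sample 2, eye starts moving at sample 14.
trace = [0.0] * 14 + [3.0, 9.0, 10.0, 10.0]
print(saccade_latency_ms(trace, stimulus_index=2))  # 200.0
```

Real smartphone-based tracking first has to estimate gaze from camera frames and cope with noise, which this sketch leaves out.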

Q: What future developments do you anticipate in low-power AI?

A: Reducing AI's energy needs will extend it to a wider range of embedded devices, reaching into tiny robots, smart homes, and medical devices. A key challenge is that efficiency gains often come at the cost of accuracy or other aspects of performance. For widespread adoption, it will be important to study these applications in more depth to find the right balance between efficiency and accuracy.
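One way to frame "the right balance between efficiency and accuracy" is as a Pareto frontier: keep only the model variants that no other variant beats on both energy and accuracy. The candidate names and numbers below are hypothetical placeholders, not results from any study.

```python
from typing import List, NamedTuple


class Candidate(NamedTuple):
    name: str
    energy_mj: float  # energy per inference; lower is better
    accuracy: float   # task accuracy; higher is better


def pareto_front(candidates: List[Candidate]) -> List[Candidate]:
    """Return candidates not dominated on (energy, accuracy), sorted by energy."""
    front = []
    best_accuracy = float("-inf")
    # Sort by energy (ties: higher accuracy first). A candidate is on the
    # front only if it is more accurate than every cheaper candidate.
    for c in sorted(candidates, key=lambda c: (c.energy_mj, -c.accuracy)):
        if c.accuracy > best_accuracy:
            front.append(c)
            best_accuracy = c.accuracy
    return front


models = [
    Candidate("tiny", 0.5, 0.70),
    Candidate("small", 1.2, 0.78),
    Candidate("pruned-medium", 1.5, 0.77),
    Candidate("medium", 3.0, 0.83),
    Candidate("large", 9.0, 0.84),
]
for m in pareto_front(models):
    print(m.name, m.energy_mj, m.accuracy)
```

Here "pruned-medium" drops out because "small" is both cheaper and more accurate; picking a point on the remaining front is then an application-specific decision.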