The ability to reason abstractly about events as they unfold is a defining feature of human intelligence. We know instinctively that crying and writing are both means of communicating, and that a panda falling from a tree and an airplane landing are both variations on descending.

Computers, however, struggle to organize the world into abstract categories, a challenge AI researchers have been steadily chipping away at. In recent years, researchers have made progress by training machine learning models on words and images infused with structural information about the world, such as how objects, animals, and actions relate to one another. This month, at the European Conference on Computer Vision, researchers presented a hybrid language-vision model that can compare and contrast a set of dynamic events captured on video to identify the high-level concepts connecting them.

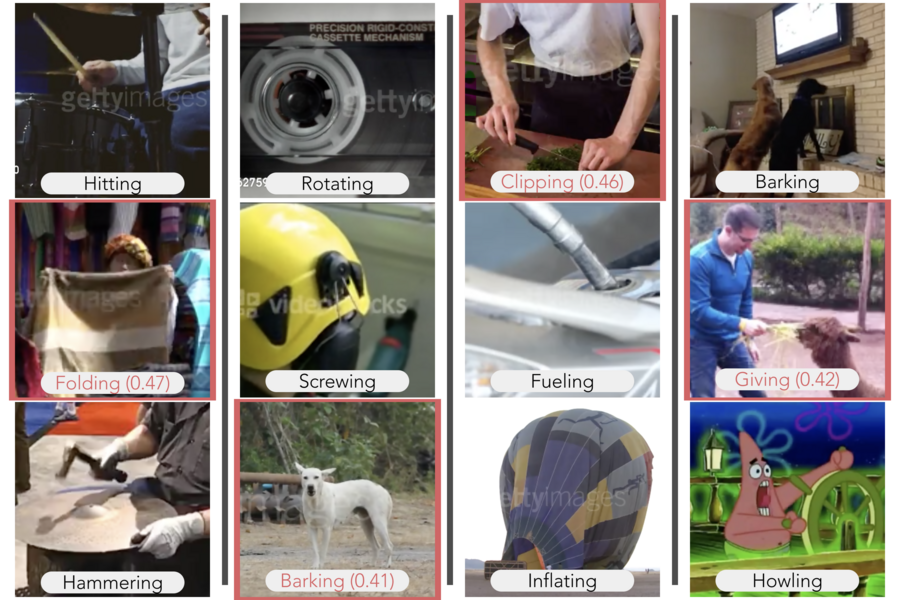

The model performed as well as or better than humans on two types of visual reasoning tasks: picking the video that conceptually completes a set, and picking the video that doesn't belong. Shown videos of a dog barking and a man howling beside his dog, for example, the model completed the set by choosing a video of a crying baby from five options. The researchers validated their results on two datasets widely used to train AI systems in action recognition: MIT's Multi-Moments in Time and DeepMind's Kinetics.

"Our research demonstrates that abstraction can be successfully integrated into AI systems to perform ordinary visual reasoning tasks at near-human levels," explains senior author Aude Oliva, a senior research scientist at MIT, co-director of the MIT Quest for Intelligence, and MIT director of the MIT-IBM Watson AI Lab. "A model capable of recognizing abstract events will deliver more accurate, logical predictions and prove more valuable for decision-making applications."

As deep neural networks have become better at recognizing objects and actions in photos and videos, researchers have set their sights on the next milestone: abstraction, and training models to reason about what they see. One approach has merged the pattern-matching power of deep networks with the logic of symbolic programs to teach a model to interpret complex object relationships in a scene. This work takes a different approach, drawing on the relationships embedded in the meanings of words to give the model visual reasoning abilities.

"Language representations enable us to incorporate contextual knowledge from text databases into our visual models," notes co-author Mathew Monfort, a research scientist at MIT's Computer Science and Artificial Intelligence Laboratory (CSAIL). "Words like 'running,' 'lifting,' and 'boxing' share common characteristics that make them more closely related to the concept of 'exercising' than to 'driving,' for example."

The researchers used WordNet, a database of word meanings, to map how each action-class label in the Moments and Kinetics datasets relates to the others. Words like "sculpting," "carving," and "cutting," for example, were connected to higher-level concepts like "crafting," "making art," and "cooking." Now, when the model recognizes an activity like sculpting, it can pick out conceptually similar activities in the dataset.
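The label-to-concept mapping can be sketched as a small lookup structure. The Python example below is a minimal illustration only: `ABSTRACT_PARENTS` is a hand-written, hypothetical excerpt of such a graph, not the links the researchers actually extracted from WordNet, and `build_concept_index` and `related_actions` are illustrative names, not part of the study's code. Here, two action labels count as related if they share at least one higher-level concept.

```python
from collections import defaultdict

# Hypothetical excerpt of a label -> higher-level-concept graph.
# The study derived its links from WordNet; these edges are illustrative only.
ABSTRACT_PARENTS = {
    "sculpting": {"crafting", "making art"},
    "carving": {"crafting", "making art", "cooking"},
    "cutting": {"crafting", "cooking"},
    "driving": {"traveling"},
}


def build_concept_index(parents):
    """Invert the label -> concepts map into concept -> labels."""
    index = defaultdict(set)
    for label, concepts in parents.items():
        for concept in concepts:
            index[concept].add(label)
    return index


def related_actions(label, parents):
    """Return other labels that share at least one abstract concept with `label`."""
    index = build_concept_index(parents)
    related = set()
    for concept in parents.get(label, set()):
        related |= index[concept]
    related.discard(label)  # an action is not its own neighbor
    return sorted(related)


print(related_actions("sculpting", ABSTRACT_PARENTS))  # ['carving', 'cutting']
print(related_actions("driving", ABSTRACT_PARENTS))    # []
```

This sketch treats every shared concept equally and ignores how far up the hierarchy the shared concept sits.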

This relational graph of abstract classes is used to train the model on two basic tasks. Given a set of videos, the model creates a numerical representation for each video that aligns with the word representations of the actions shown in it. An abstraction module then combines these per-video representations into a new set representation, which is used to identify the abstraction shared by all the videos in the set.
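The two tasks can be sketched on top of per-video embeddings. The Python example below is a minimal, hypothetical illustration, assuming each video has already been mapped to a vector: the three-dimensional vectors and the meaning of their axes are made up, and a simple average of normalized embeddings stands in for the learned abstraction module described in the study. Set completion picks the candidate closest (by cosine similarity) to the set representation; the odd-one-out task picks the video least similar to the representation of the remaining videos.

```python
import math


def normalize(v):
    """Scale a vector to unit length."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def cosine(a, b):
    """Cosine similarity between two vectors."""
    return sum(x * y for x, y in zip(normalize(a), normalize(b)))


def set_representation(embeddings):
    """Stand-in abstraction module (assumption): the mean of the normalized
    video embeddings. The study used a learned module instead."""
    unit = [normalize(e) for e in embeddings]
    dim = len(unit[0])
    return [sum(u[i] for u in unit) / len(unit) for i in range(dim)]


def complete_set(set_embeddings, candidates):
    """Index of the candidate most similar to the set representation."""
    target = set_representation(set_embeddings)
    scores = [cosine(target, c) for c in candidates]
    return max(range(len(candidates)), key=lambda i: scores[i])


def odd_one_out(embeddings):
    """Index of the video least similar to the representation of the others."""
    scores = []
    for i, e in enumerate(embeddings):
        rest = embeddings[:i] + embeddings[i + 1:]
        scores.append(cosine(set_representation(rest), e))
    return min(range(len(embeddings)), key=lambda i: scores[i])


# Hypothetical toy embeddings; axes loosely mean (communicating, exercising, descending).
dog_barking = [0.9, 0.1, 0.0]
man_howling = [0.8, 0.2, 0.1]
baby_crying = [0.85, 0.05, 0.1]
person_running = [0.1, 0.9, 0.0]
plane_landing = [0.0, 0.1, 0.95]

print(complete_set([dog_barking, man_howling],
                   [baby_crying, person_running, plane_landing]))  # 0 (baby_crying)
print(odd_one_out([dog_barking, man_howling, baby_crying, plane_landing]))  # 3 (plane_landing)
```

Averaging is the simplest order-independent way to pool a set, which is why it serves as the stand-in here; it says nothing about how the study's abstraction module is actually built.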

To see how the model compared with humans, the researchers asked human participants to complete the same visual reasoning tasks online. To the researchers' surprise, the model matched human performance in many scenarios, sometimes with unexpected results. In one variation of the set completion task, after viewing videos of someone wrapping a gift and someone covering an item with tape, the model selected a video of people at the beach burying someone in sand.

"It's effectively 'covering,' but visually quite different from the other clips," explains Camilo Fosco, a PhD student at MIT and co-first author of the study alongside fellow PhD student Alex Andonian. "Conceptually it fits perfectly, but even I had to pause and think about the connection."

The model does have limitations, including a tendency to overemphasize certain features. In one instance, it suggested completing a set of sports videos with footage of a baby playing with a ball, apparently associating balls with exercise and competition.

The researchers say that deep learning models capable of more abstract "thinking" may eventually be able to learn from less data. Abstraction also paves the way toward higher-level, more human-like reasoning.

"One defining characteristic of human cognition is our ability to describe something in relation to something else—to compare and contrast," concludes Oliva. "This represents a rich and efficient learning approach that could eventually lead to machine learning models capable of understanding analogies and moving closer to intelligent communication with humans."

Additional study authors include Allen Lee from MIT, Rogerio Feris from IBM, and Carl Vondrick from Columbia University.