Fast, accurate interpretation of medical scans such as X-rays is critical to patient care and can be life-saving. But a specialized radiologist is not always available, which can delay diagnosis. That gap motivated researchers at MIT's Computer Science and Artificial Intelligence Laboratory (CSAIL) to develop machine learning systems that replicate the diagnostic work radiologists do. "Our goal is to train machines that can reproduce what radiologists accomplish daily," explains Ruizhi "Ray" Liao, a postdoc and recent PhD graduate at CSAIL. Liao is the first author of a paper on the work presented at MICCAI 2021, an international conference on medical image computing.

Computer-assisted interpretation of medical images is not new. What sets the MIT team's approach apart is that it draws on a largely overlooked resource: the large body of radiology reports that accompany medical images. Radiologists write these reports during routine clinical practice, and the team uses them to improve the interpretive abilities of machine learning algorithms. The researchers also rely on mutual information, a concept from information theory that measures the statistical interdependence of two variables, to make their approach more effective.
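For readers who want the formal version, the standard textbook definition of mutual information for two discrete variables $X$ and $Y$ is shown below. It is general background, not a formula taken from the MIT paper; for continuous variables the sums become integrals.

$$
I(X;Y) = \sum_{x}\sum_{y} p(x,y)\,\log\frac{p(x,y)}{p(x)\,p(y)}
$$

As a worked example, suppose $X$ and $Y$ are binary, each equally likely to be 0 or 1, and always equal. Then $p(0,0) = p(1,1) = \tfrac{1}{2}$ while $p(x)\,p(y) = \tfrac{1}{4}$, so $I(X;Y) = 2 \cdot \tfrac{1}{2}\log 2 = \log 2$: knowing one variable removes all uncertainty about the other. If $X$ and $Y$ are independent, every ratio inside the logarithm equals 1 and $I(X;Y) = 0$.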

The system relies on three neural networks. The first learns to estimate the severity of a disease such as pulmonary edema by training on many lung X-rays paired with physicians' severity ratings, and it encodes what it learns as a set of numbers. A second, separate network does the same for the text of the radiology reports, representing that information as a different set of numbers. A third network then integrates the image and text representations so as to maximize the mutual information between them. "When mutual information between images and text is high, it indicates that images can accurately predict text content and vice versa," notes Polina Golland, a CSAIL principal investigator and MIT professor.
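The alignment step can be illustrated with a minimal sketch. The article does not say which mutual information estimator the team used, so the code below assumes InfoNCE, a common contrastive lower bound on mutual information, as a stand-in. The function names, the cosine-similarity critic, the temperature of 0.1, and the random "embeddings" that play the role of the image and text networks' outputs are all hypothetical.

```python
import numpy as np

# Hypothetical sketch: InfoNCE is assumed as the mutual information estimator;
# it is a stand-in, not necessarily the estimator used in the MIT paper.


def l2_normalize(x: np.ndarray) -> np.ndarray:
    """Scale each row to unit length so dot products become cosine similarities."""
    return x / np.linalg.norm(x, axis=1, keepdims=True)


def infonce_mi_lower_bound(img_emb: np.ndarray, txt_emb: np.ndarray,
                           temperature: float = 0.1) -> float:
    """InfoNCE lower bound on I(image; text) for a batch of N paired embeddings.

    Row i of img_emb and row i of txt_emb describe the same case (a positive pair);
    the other N - 1 texts in the batch act as negatives for image i.
    """
    n = img_emb.shape[0]
    # Critic: temperature-scaled cosine similarity between every image and every text.
    scores = l2_normalize(img_emb) @ l2_normalize(txt_emb).T / temperature
    # Row-wise log-softmax, stabilized by subtracting each row's maximum.
    scores = scores - scores.max(axis=1, keepdims=True)
    log_probs = scores - np.log(np.exp(scores).sum(axis=1, keepdims=True))
    # log N plus the mean log-probability of the true pairs; this never exceeds log N.
    return float(np.log(n) + np.mean(np.diag(log_probs)))


rng = np.random.default_rng(0)
image_vecs = rng.normal(size=(8, 16))                        # stand-in image-network outputs
matched_text = image_vecs + 0.05 * rng.normal(size=(8, 16))  # text vectors that track the images
unrelated_text = rng.normal(size=(8, 16))                    # text vectors independent of the images

matched_mi = infonce_mi_lower_bound(image_vecs, matched_text)
unrelated_mi = infonce_mi_lower_bound(image_vecs, unrelated_text)
print(f"matched: {matched_mi:.3f}  unrelated: {unrelated_mi:.3f}  ceiling log(8): {np.log(8):.3f}")
```

Training would adjust the networks' weights to push this estimate up for true image-report pairs. Because the InfoNCE estimate is capped at log N, contrastive estimators of this kind tend to benefit from larger batches.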

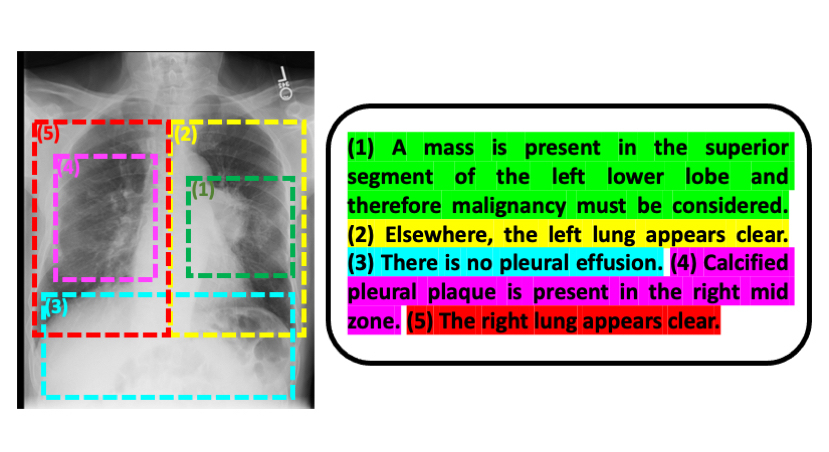

Liao, Golland, and their colleagues introduced a second innovation: instead of working from entire images and complete radiology reports, the system breaks each report into individual sentences and pairs them with the portions of the image those sentences describe. This local approach estimates disease severity more accurately than analyzing whole images and whole reports. "This method provides more precise severity estimates and enables faster learning by working with smaller, more focused data segments," Golland explains. "Additionally, it expands our training dataset by creating numerous sample pairs from each case."
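A minimal sketch can make the sentence-to-region idea concrete. It assumes a square grid of image tiles as the "regions," a naive period-based sentence splitter, random projections standing in for the trained image and text networks, and cosine similarity for matching each sentence to its most relevant region. The function names and the three-sentence report are invented for illustration, and the MIT system learns these correspondences rather than applying this fixed rule.

```python
import numpy as np

# Hypothetical sketch: fixed tiling, naive sentence splitting, and random "encoders"
# stand in for the learned components of the real system.


def image_to_regions(image: np.ndarray, patch: int) -> np.ndarray:
    """Cut a 2-D image into non-overlapping patch x patch tiles, one flattened row per tile."""
    h, w = image.shape
    tiles = [
        image[r:r + patch, c:c + patch].ravel()
        for r in range(0, h - patch + 1, patch)
        for c in range(0, w - patch + 1, patch)
    ]
    return np.stack(tiles)


def split_report(report: str) -> list[str]:
    """Split a report on periods; a real pipeline would use a proper sentence tokenizer."""
    return [s.strip() for s in report.split(".") if s.strip()]


def match_sentences_to_regions(sentence_emb: np.ndarray, region_emb: np.ndarray):
    """Pair each sentence with the region whose embedding has the highest cosine similarity."""
    s = sentence_emb / np.linalg.norm(sentence_emb, axis=1, keepdims=True)
    r = region_emb / np.linalg.norm(region_emb, axis=1, keepdims=True)
    sim = s @ r.T                # shape: (num_sentences, num_regions)
    best = sim.argmax(axis=1)    # index of the most similar region for each sentence
    return [(i, int(j), float(sim[i, j])) for i, j in enumerate(best)]


rng = np.random.default_rng(0)
xray = rng.random((64, 64))                    # stand-in for a chest X-ray
regions = image_to_regions(xray, patch=16)     # 4 x 4 grid -> 16 regions
sentences = split_report("Mild pulmonary edema. No pleural effusion. Heart size is normal.")

region_encoder = rng.normal(size=(16 * 16, 32))          # hypothetical image-region encoder
region_emb = regions @ region_encoder
sentence_emb = rng.normal(size=(len(sentences), 32))     # hypothetical sentence encoder

pairs = match_sentences_to_regions(sentence_emb, region_emb)
print(len(regions), len(sentences), len(pairs))          # 16 3 3
```

Even this toy case shows how a single study yields several local training pairs (one per sentence, chosen from 3 × 16 = 48 candidate sentence-region combinations) instead of one image-report pair.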

Beyond the technical appeal, Liao emphasizes the clinical significance of the work. "My primary motivation is developing technology with real-world medical applications that can make a meaningful difference in patient care," he says. A pilot program now underway at Beth Israel Deaconess Medical Center is testing how MIT's machine learning model could inform clinical decisions for patients with heart failure, particularly in emergency settings where rapid assessment is crucial.

The approach is not limited to chest X-rays. "This technology could be adapted for any type of imagery and corresponding textual data, both within and outside the medical field," Golland observes. "Furthermore, the underlying approach could potentially be applied to different data modalities beyond images and text, opening up exciting possibilities for future research."

Liao's co-authors on the paper were MIT CSAIL postdoc Daniel Moyer and Golland; Miriam Cha and Keegan Quigley at MIT Lincoln Laboratory; William M. Wells at Harvard Medical School and MIT CSAIL; and clinical collaborators Seth Berkowitz and Steven Horng at Beth Israel Deaconess Medical Center.

The work was supported by the NIH NIBIB Neuroimaging Analysis Center, Wistron, the MIT-IBM Watson AI Lab, the MIT Deshpande Center for Technological Innovation, the MIT Abdul Latif Jameel Clinic for Machine Learning in Health (J-Clinic), and MIT Lincoln Laboratory.