Imagine glancing briefly at a stranger's face from just a few feet away, then stepping back and looking again. Would you recognize that person? Almost certainly, yes. This simple demonstration reveals something extraordinary about human vision: the brain can identify objects consistently despite changes in distance, angle, or scale. Yet even the most advanced artificial intelligence systems, including deep neural networks, struggle with this seemingly basic task.

Modern machine learning algorithms need extensive training on many variations of an object before they can recognize it under different conditions. They achieve this "invariance" largely by memorizing examples, and they cannot achieve it when only one example is available. This limitation makes understanding the mechanisms behind human visual processing important for engineers developing next-generation AI vision systems. It is equally significant for neuroscientists who model primate vision with computational frameworks. The evidence increasingly suggests that, when learning from minimal exposure, biological systems use different strategies than current artificial neural networks.

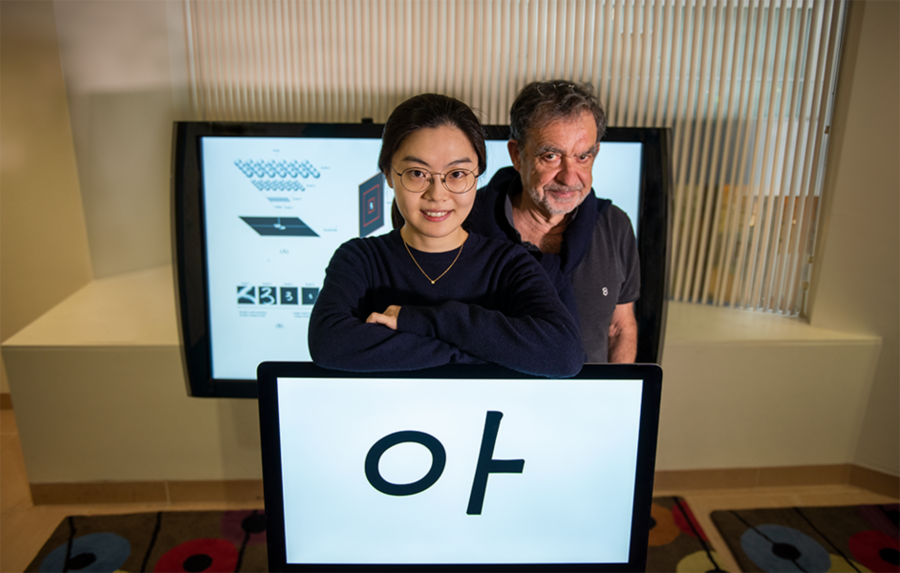

A study published in the journal Scientific Reports by MIT doctoral researcher Yena Han and her colleagues, titled "Scale and translation-invariance for novel objects in human vision," examines this phenomenon in more detail and points toward biologically inspired neural network designs.

"The capacity of humans to learn from minimal examples presents a stark contrast to current deep learning approaches," explains co-author Tomaso Poggio, director of the Center for Brains, Minds and Machines (CBMM) and professor at MIT. "This distinction carries profound implications for both engineering superior vision systems and understanding the true mechanisms of human visual processing. A crucial factor is the inherent invariance of biological vision to transformations like scaling and shifting—surprisingly overlooked in AI development, partly due to ambiguous psychophysical data. Han's research now provides definitive measurements of these fundamental visual invariances in humans."

To distinguish invariance that comes from intrinsic computation from invariance that is learned through experience, the research team measured the range of invariance in one-shot learning. Subjects unfamiliar with Korean characters were shown each character just once under a specific condition, then tested on the same characters at different scales or positions. The results confirmed what intuition suggests: humans show substantial scale-invariant recognition after only a single exposure to a novel object. The research also found that position-invariance is limited and depends on the size of the object and its placement within the visual field.

Following these human experiments, Han's team ran comparable tests on deep neural networks designed to reproduce the human performance. The findings indicate that, to achieve human-like invariant object recognition, neural network architectures must explicitly build in scale-invariance. The research also suggests that modeling the limited position-invariance of human vision requires networks in which neuron receptive fields grow with distance from the center of the visual field. This differs from conventional neural networks, which process an image at uniform resolution with the same shared filters everywhere.
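One way to picture such a design is a stack of crops centered on the fixation point, each covering twice the field of view of the previous one at half the resolution. The Python sketch below is illustrative only and is not the authors' model: the function names, the 64-pixel canvas, the square stimulus, and the cosine-similarity matching are all assumptions made for the example. It shows that when an object doubles in size, its representation simply moves up one level of the stack, so a template stored from a single exposure still matches after pooling over levels.

```python
import numpy as np

def center_crop(image, size):
    """Return the central size x size window of a square 2-D image."""
    start = (image.shape[0] - size) // 2
    return image[start:start + size, start:start + size]

def block_average(image, factor):
    """Downsample by averaging non-overlapping factor x factor blocks."""
    n = image.shape[0] // factor
    trimmed = image[:n * factor, :n * factor]
    return trimmed.reshape(n, factor, n, factor).mean(axis=(1, 3))

def foveated_pyramid(image, base=16, levels=3):
    """Crops of side base * 2**k around the centre, each reduced to base x base.

    Level 0 covers a small central window at full resolution. Each higher
    level covers twice the field of view at half the resolution, so the
    effective receptive field grows with distance from the centre.
    """
    return np.stack([
        block_average(center_crop(image, base * 2 ** k), 2 ** k)
        for k in range(levels)
    ])

def scale_pooled_match(pyramid, template):
    """Best cosine similarity between the template and any pyramid level."""
    flat = pyramid.reshape(len(pyramid), -1)
    t = template.ravel()
    sims = flat @ t / (np.linalg.norm(flat, axis=1) * np.linalg.norm(t) + 1e-12)
    return sims.max()

def centered_square(canvas, side):
    """Hypothetical stimulus: a bright square of the given side at the centre."""
    image = np.zeros((canvas, canvas))
    start = (canvas - side) // 2
    image[start:start + side, start:start + side] = 1.0
    return image

# One exposure to the small object, then a test with the object twice as large.
small = foveated_pyramid(centered_square(64, 8))
large = foveated_pyramid(centered_square(64, 16))
template = small[0]  # representation stored after the single exposure

print(np.allclose(small[0], large[1]))       # same pattern, one level higher
print(scale_pooled_match(large, template))   # similarity after pooling over levels
```

Because the same comparison is applied at every level, no retraining is needed for the larger object. An object placed far from the center, by contrast, falls only in the coarse outer levels, which is one intuition for why position-invariance in such a design depends on size and eccentricity.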

"Our findings offer fresh insights into how the brain represents objects across different viewing perspectives," notes Han, CBMM researcher and lead author. "Beyond advancing neuroscience, these discoveries provide valuable guidance for developing more effective architectural designs for deep neural networks, potentially revolutionizing approaches to improving AI visual recognition systems."

The study's authors are Yena Han, Tomaso Poggio, Gemma Roig, and Gad Geiger.